Make the Most of Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is emerging as the de facto architecture for building applications with LLMs. There are three layers to an LLM app:

- The LLM itself. Examples: Llama 2, GPT4, etc.

- Business data

- Business logic

Most modern LLMs are trained on datasets that roughly contain the whole text available on the web. This makes these LLMs great for generic tasks that involve language understanding, reasoning over text data, etc.

However, most business use cases involve internal business data. For example, to build a custom medical chatbot assistant for a hospital, you would need to supply proprietary medical data that only the hospital has access to and is not available on the web. Similarly, to build a devops assistant for your infrastructure, you would need to supply your internal infrastructure data to the LLM.

This is where RAG comes in. The basic idea behind RAG is to extend the context of the core LLM with business data and business logic to build a useful business app.

That said, the question “should I adopt a RAG architecture?” does not make sense anymore. If you want to use an LLM to perform business-related tasks like question answering, recommendations, providing assistance, etc., then you HAVE to use some sort of RAG.

That is the line of thinking one should have about RAG. Now, let’s dig deeper into the specifics:

- Extend LLM context to private data: As mentioned before, LLMs are trained on public datasets collected from the internet; hence, they never saw your proprietary medical documents or industrial designs.

- Enhance accuracy and reliability: Some tasks are generic and can be performed by an LLM without additional context data. However, adding that private context data would greatly improve:

- Accuracy: the LLM would answer more questions correctly when additional context is provided.

- Reliability: LLMs hallucinate, and there is nothing we can do to completely eliminate that. Even the best and largest LLMs out there would spit nonsense at times. Grounding the LLM’s answers in context data works like a guardrail against hallucinations.

- Accuracy: the LLM would answer more questions correctly when additional context is provided.

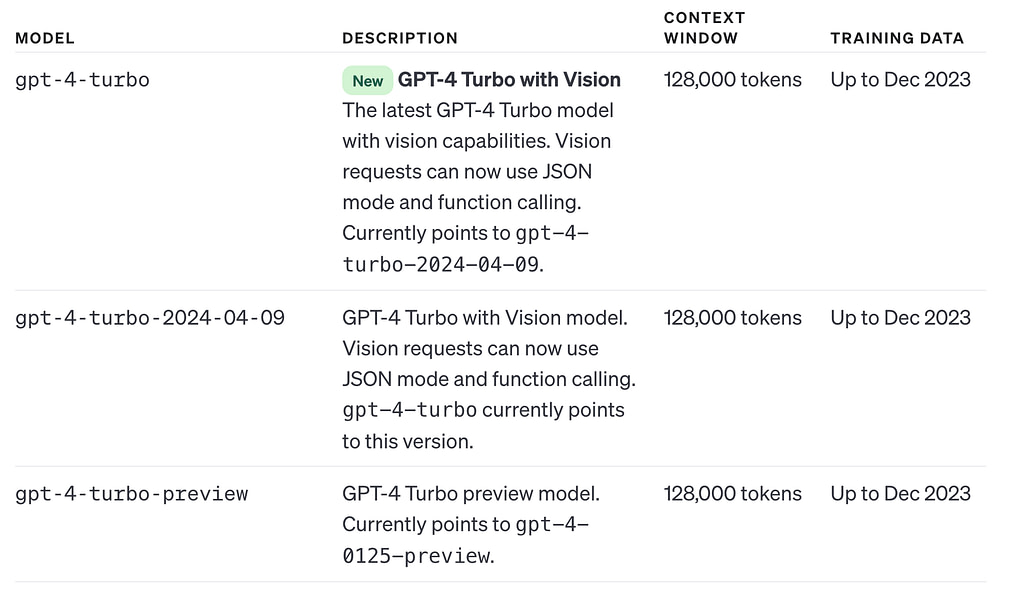

- Provide up-to-date information: LLMs are trained once, then put in inference mode after that. Hence, the data cut-off is a major characteristic of an LLM. As of today, the latest training data for OpenAI models, for example, dates back to December 2023. The consequence is that these models are pretty useless in answering anything around the recent Iran-Israel events, for example (date: April 13, 2024). To augment GPT4 with this data, you would need to hook it to real-time news articles, for example.

4. Enhance user trust: Say you ask GPT4, “What is a major event that happened on October 7th, 2023?”. Since the data cut-off is December 2023, GPT4 may answer, “A missile attack was launched on Israel at 7 a.m.,” for example. However, although the main answer is correct, the specifics (missile attack, 7 a.m.) need some support to be trusted. RAG allows you to provide such support by ingesting news articles, prompting GPT4 to answer from these articles and cite their sources.

Some epic LLM hallucinations and bad citation examples include this one:

In the legal case of Mata v. Avianca, a New York attorney used ChatGPT for legal research, which led to the inclusion of fabricated citations and quotes in a federal court case. The attorney, Steven Schwartz, admitted to using ChatGPT, which highlights the direct consequences of relying on AI-generated content without grounding in context data (and without verification either in this case).

5. Reduce costs: This is more relevant in the case of deploying and managing your own LLM. Since RAG extends the LLM knowledge and context with external data, you remove the need for retraining or fine-tuning the LLM based on recent data. You also don’t need to fine-tune with your proprietary datasets. Training and fine-tuning LLMs can be very costly and may take days to months to complete.

Another interesting aspect of RAG is that you are technically offloading the retrieval step from internal LLM retrieval (KV cache) to external retrieval. Recent LLMs have a larger context, which allows them to ingest large data segments and ask questions about them; however, inferences on large chunks of data are both slower and costlier than external RAG retrieval.

6. Improve Speed:

As we already explained, when data is larger than what we can input to the LLM, we don’t have options but to use RAG. Now, say you have a large context LLM, like Gemini 1.5 with a 1 million token context window, then you may think of giving it all the data at once (if less than 1 million tokens). In this case, inference would take a long time and cost you much more compared to doing external RAG retrieval.

Some examples of RAG systems in production

Perplexity (.ai): Perplexity is a good example of a web RAG. It is an answer engine that answers user queries from the web and cites sources.

Cursor (.sh): Cursor is a great coding assistant. It is a RAG system that indexes your code base, allowing you to ask questions about your code and get contextual answers.

HeyCloud (.ai): HeyCloud is a specialized AI assistant for DevOps teams. It integrates with your cloud stack (like AWS or Kubernetes) and allows you to ask DevOps and cloud-related questions like:

- How many users on my AWS account have permissions to see billing information?

- How much do I spend on EC2 in the London region per month?

- Create an EKS cluster with 20 nodes for under $100 per month.

Conclusion

In this article, we went through the main reasons RAG is relevant (or necessary) in almost any LLM app. One more thing I want to say here: always start simple. RAG systems can quickly get out of control and become untraceable; start with a tiny part of the data, preferably with an API-provided LLM like GPT4. Once you have the first toy version complete and a baseline performance, then start adding more advanced algorithms and strategies.