Curious about prompt engineering? Strategies to make LLMs work for you.

Large language models (LLMs) are powerful tools, especially given their ability to process and understand human language. They can work even better when paired with technical know-how and a nuanced approach to prompt engineering. Done well, prompt engineering tailors an LLM to your specific needs and helps it give you the most useful insights it can.

This guide discusses prompt engineering and why it is so important for LLMs.

Understanding Prompt Engineering

Prompt engineering is the practice of crafting the queries given to large language models (LLMs) so that they produce the results a user wants. Getting the full benefit of these models requires knowing how to do it. Why is prompting so central to the use of LLMs, and what does it require in return?

The Role of Prompts in LLMs

A prompt is the input an LLM responds to, and how well the model performs a task depends largely on how well that prompt is put together. A well-engineered prompt tends to produce accurate, relevant, and insightful output. A poorly designed one tends to produce output that is less accurate or merely generic.

Strategies for Effective Prompt Engineering

For prompt engineering to be effective, there are a few strategies to put into practice. These include but are not limited to the following.

- Clarity and Specificity: Clear and specific prompts almost always outperform vague ones. State the task, the subject, and any constraints explicitly rather than leaving the model to guess.

- Contextual Information: Giving the model the right amount of context can greatly improve its performance, because it can only work from the background information it has been given. It also helps to specify the format you want the output in.

- Iterative Refinement: Good prompts are usually reached by iteration. Experiment with different versions, observe the model’s responses, and revise the prompt in light of those responses.
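The first two strategies can be sketched as a small helper that assembles a prompt from a specific task, optional context, and an output format. This is a minimal illustration, not a standard API: the function name `build_prompt`, the section labels, and the sales figures are all made up for the example.

```python
def build_prompt(task: str, context: str = "", output_format: str = "") -> str:
    """Assemble a prompt from a task, optional context, and an output format.

    The section labels ("Context:", "Task:", "Output format:") are an
    assumption for this sketch; any consistent layout would do.
    """
    parts = []
    if context:
        parts.append(f"Context:\n{context.strip()}")
    parts.append(f"Task:\n{task.strip()}")
    if output_format:
        parts.append(f"Output format:\n{output_format.strip()}")
    return "\n\n".join(parts)


# A vague prompt: no context, no format, an open-ended task.
vague_prompt = build_prompt("Tell me about our sales.")

# A specific prompt: concrete task, background facts, explicit format.
# The figures below are invented purely for illustration.
specific_prompt = build_prompt(
    task="Summarize the three largest changes in Q3 sales compared with Q2.",
    context="Q2 revenue: 1.2M USD. Q3 revenue: 1.5M USD. Most growth came from the EU region.",
    output_format="A bulleted list with exactly three items, one sentence each.",
)

print(specific_prompt)
```

Keeping the three parts as separate arguments also makes iteration easier, since each revision can change one part and leave the others fixed.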

Challenges in Prompt Engineering

Prompt engineering has its challenges, but they can be overcome. Let’s take a look at what they are.

Model Limitations

Even the most advanced LLMs are not without limitations. They excel at some tasks and are weaker at others. Identify these limitations, set realistic expectations, and write prompts that play to the model’s strengths.

Overfitting and Underfitting

Like other branches of machine learning, prompt engineering has its own versions of overfitting and underfitting. A prompt is overfitted when it is so specific and narrowly tailored that it works for one case and fails on anything slightly different. A model’s response rarely needs to be tailored to the degree a human’s reply to the same prompt would be. A prompt is underfitted when it is so broad that the responses are too generic to be useful. There is a fine line to walk between the two: the prompt should be specific enough to get the answer that is just right, yet general enough to keep working across the inputs you care about.
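One way to notice a prompt that is too narrow or too broad is to run it against several varied inputs instead of a single favorite example. The sketch below does that with a deliberately simple stand-in: `toy_model` is a keyword rule, not a language model, and the templates, reviews, and `pass_rate` helper are all hypothetical. In practice the stand-in would be replaced by a call to whichever LLM you use.

```python
from typing import Callable


def toy_model(prompt: str) -> str:
    """Stand-in for a real LLM call. This is a keyword rule, NOT a language model."""
    text = prompt.lower()
    if "one word" not in text:
        # Without a format instruction, the toy answers vaguely.
        return "It depends on what you are looking for."
    positive = sum(word in text for word in ("love", "great"))
    negative = sum(word in text for word in ("broke", "terrible"))
    return "positive" if positive > negative else "negative"


def pass_rate(template: str, cases: list[tuple[str, str]],
              model: Callable[[str], str]) -> float:
    """Fraction of cases where the model's output exactly matches the label."""
    hits = 0
    for text, expected in cases:
        output = model(template.format(text=text))
        if output.strip().lower() == expected:
            hits += 1
    return hits / len(cases)


# Invented test cases: (review text, expected label).
cases = [
    ("I love this phone, great battery.", "positive"),
    ("The screen broke after a week.", "negative"),
    ("Terrible support, would not buy again.", "negative"),
    ("Great value for the price.", "positive"),
]

# Too broad: no task, no format.
too_broad = "Thoughts? {text}"
# Too narrow: hard-codes details of one review the prompt was tuned on.
too_narrow = ("This customer loves the great battery. "
              "Reply with one word, positive or negative: {text}")
# Balanced: states the task and the format, nothing case-specific.
balanced = ("Classify the sentiment of this review. "
            "Reply with one word, positive or negative: {text}")

for name, template in [("too broad", too_broad),
                       ("too narrow", too_narrow),
                       ("balanced", balanced)]:
    print(name, pass_rate(template, cases, toy_model))
```

With this toy rule the over-tailored template leaks its own wording into every case and mislabels the negative reviews, while the broad one never produces a usable label. A real model will behave differently, but the habit of scoring each prompt version on a small, varied test set carries over.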

Advanced Techniques in Prompt Engineering

Beyond the basics, there are advanced techniques that can further refine the process of prompt engineering, making LLMs even more effective.

Chain-of-Thought Prompting

Chain-of-thought prompting asks a model to work toward an answer through explicit intermediate steps rather than jumping straight to a conclusion. It is particularly useful for hard, multi-step problems and for general-purpose models that have not been specialized for the task at hand. By breaking a problem into smaller, more manageable parts, both the prompt designer and the model get a clearer path to the desired output. As a young Sam Altman once said, “When you have a really hard problem to solve, think smaller.”

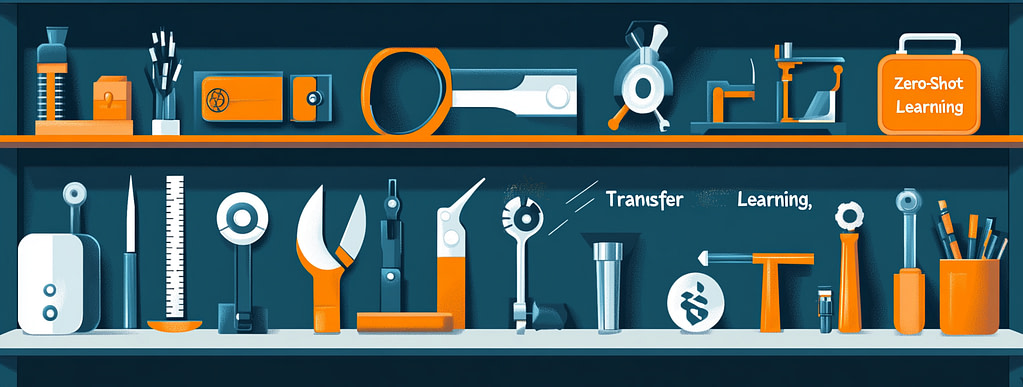
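As a rough illustration of chain-of-thought prompting, the sketch below contrasts a direct prompt with one that asks for intermediate steps, then pulls the final answer out of a response. The wording of the instruction, the `Answer:` convention, and the function names are assumptions for this example, and `sample_response` is written by hand to show the expected shape; it is not real model output.

```python
from typing import Optional


def direct_prompt(question: str) -> str:
    """Ask for the answer with no intermediate reasoning."""
    return f"Question: {question}\nAnswer:"


def chain_of_thought_prompt(question: str) -> str:
    """Ask the model to show its steps before committing to an answer."""
    return (
        f"Question: {question}\n"
        "Work through the problem step by step, showing each intermediate result.\n"
        "Then give the final answer on its own line, prefixed with 'Answer:'."
    )


def extract_final_answer(response: str) -> Optional[str]:
    """Return the text after the last line starting with 'Answer:', or None."""
    for line in reversed(response.splitlines()):
        if line.strip().lower().startswith("answer:"):
            return line.split(":", 1)[1].strip()
    return None


question = ("A train travels 60 km in the first hour and 90 km in the "
            "second hour. What is its average speed?")
print(chain_of_thought_prompt(question))

# Hand-written example of a step-by-step response (not produced by a model).
sample_response = (
    "Total distance: 60 + 90 = 150 km.\n"
    "Total time: 2 hours.\n"
    "Average speed: 150 / 2 = 75 km/h.\n"
    "Answer: 75 km/h"
)
print(extract_final_answer(sample_response))
```

Asking for the final answer on a marked line keeps the reasoning visible for review while still giving downstream code something easy to parse.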

Zero-Shot and Few-Shot Learning

Zero-shot and few-shot learning are techniques that let a model perform a task with no task-specific examples (zero-shot) or with only a handful of them (few-shot). In prompt engineering, this means either describing the task on its own or including a few worked examples in the prompt, and relying on the model’s pre-existing knowledge to elicit sophisticated behavior.

This is especially valuable when dealing with niche topics or tasks for which there is limited training data available.
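The difference between the two is easiest to see in the prompt text itself. The helper below is a minimal sketch: `few_shot_prompt`, the `Input:`/`Output:` layout, and the support-ticket examples are invented for illustration. Passing an empty example list yields a zero-shot prompt.

```python
def few_shot_prompt(instruction: str,
                    examples: list[tuple[str, str]],
                    query: str) -> str:
    """Build a prompt from an instruction, worked examples, and a new input.

    With an empty `examples` list the result is a zero-shot prompt.
    """
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


instruction = "Classify the support ticket as 'billing', 'bug', or 'other'."
query = "The export button crashes the app."

# Zero-shot: the task description alone.
zero_shot = few_shot_prompt(instruction, [], query)

# Few-shot: the same task plus a handful of made-up worked examples.
few_shot = few_shot_prompt(
    instruction,
    [
        ("I was charged twice this month.", "billing"),
        ("The page freezes when I upload a file.", "bug"),
        ("Do you have an office in Berlin?", "other"),
    ],
    query,
)

print(zero_shot)
print("---")
print(few_shot)
```

Ending the prompt with an open `Output:` line invites the model to continue the pattern the examples establish.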

Enhancing Prompt Engineering with Transfer Learning

Transfer learning is a powerful technique that can enhance prompt engineering by leveraging knowledge from one task to improve performance on another. In the context of LLMs, transfer learning allows developers to fine-tune models on specific prompts or tasks, benefiting from the pre-existing knowledge encoded in the model.

By transferring knowledge from a source task to a target task, developers can expedite the training process and improve the model’s performance on new tasks. This approach is particularly useful when working with limited data or when aiming to achieve high performance on a specific task.

Benefits of Transfer Learning in Prompt Engineering

Transfer learning offers several benefits in the realm of prompt engineering. Firstly, it reduces the need for extensive training data, as the model can leverage knowledge from previous tasks. This is especially advantageous when working with specialized domains or niche topics where data availability is limited.

Additionally, transfer learning can accelerate the fine-tuning process, allowing developers to quickly adapt LLMs to new tasks or prompts. This agility is crucial in dynamic environments where prompt requirements may change frequently.

Implementation of Transfer Learning in Prompt Engineering

Implementing transfer learning in prompt engineering involves selecting a suitable pre-trained model as the source, identifying the target task or prompt, and fine-tuning the model’s parameters to optimize performance. This process requires careful consideration of the similarities between the source and target tasks, as well as the specific nuances of the prompt being engineered.
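The steps above (pick a pre-trained source, identify the target, fine-tune the parameters) can be shown in miniature. The sketch below uses a toy one-variable linear model rather than an LLM, with made-up source and target tasks, so it only illustrates the idea: starting from parameters learned on a related task reaches a good fit on a small target dataset in fewer updates than starting from zero.

```python
def train(w: float, b: float, data: list[tuple[float, float]],
          steps: int, lr: float = 0.05) -> tuple[float, float]:
    """Plain gradient descent on mean squared error for y = w * x + b."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of y = w * x + b on the given data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Source task (plenty of data): y = 3x + 1. Invented for this sketch.
source = [(k / 10, 3.0 * (k / 10) + 1.0) for k in range(-20, 21)]
# Target task (only three points): y = 3.2x + 0.8, similar but not identical.
target = [(x, 3.2 * x + 0.8) for x in (-1.0, 0.0, 1.0)]

# 1. "Pre-train" on the source task.
pretrained = train(0.0, 0.0, source, steps=500)
# 2. Fine-tune on the target task for a few steps, starting from pretrained weights.
fine_tuned = train(*pretrained, target, steps=10)
# 3. Baseline: the same few steps on the target task, starting from zero.
from_scratch = train(0.0, 0.0, target, steps=10)

print("fine-tuned error:  ", mse(*fine_tuned, target))
print("from-scratch error:", mse(*from_scratch, target))
```

The gap between the two errors depends on how similar the source and target tasks are, which is why that similarity deserves the careful consideration mentioned above.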

By strategically applying transfer learning techniques, developers can enhance the effectiveness of prompt engineering, leading to improved LLM performance and more accurate outputs.

Conclusion

Prompt engineering is a crucial aspect of working with LLMs, offering a pathway to harnessing their full potential. By understanding the principles of effective prompt design, recognizing the challenges, and exploring advanced techniques such as transfer learning, developers and researchers can optimize LLMs for a wide range of applications. As the field of AI continues to evolve, so too will the strategies for prompt engineering, promising even greater capabilities and innovations in the future.