Designing Agentic AI Systems, Part 4: Data Retrieval and Agentic RAG

Up to this point, we’ve covered agentic system architecture: how to organize your system into sub-agents and how to build uniform mechanisms that standardize communication.

Today we’ll turn our attention to the tool layer and one of the most important aspects of agentic system design you’ll need to consider: data retrieval.

Data Retrieval and Agentic RAG

It’s possible to create agentic systems that don’t require data retrieval. That is because some tasks can be accomplished using just the knowledge your language model was trained on.

For example, you could likely create an effective agent to write a book about historical topics like the Roman Empire or the American Civil War.

For most agentic systems, however, providing access to data is a key aspect of system design. As such, there are several design principles that we need to consider in this functional area.

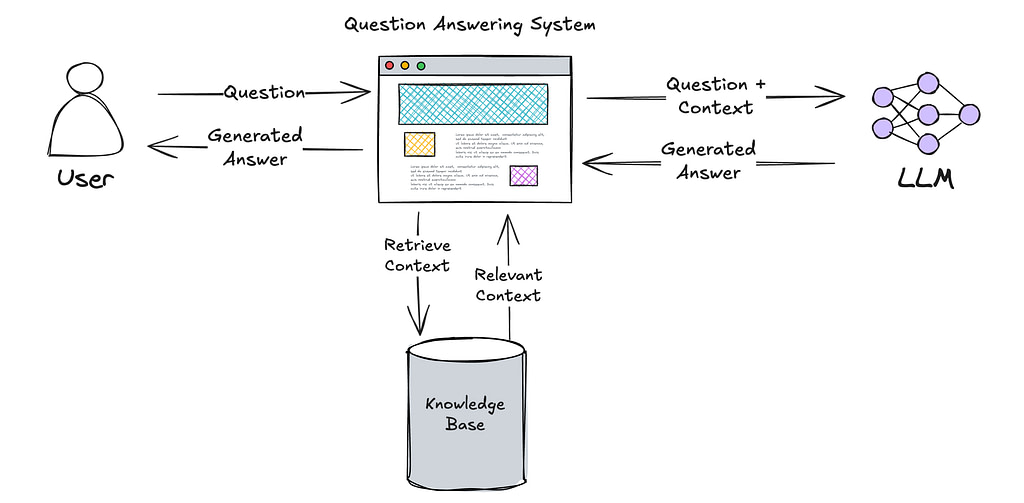

Retrieval Augmented Generation (RAG) has emerged as the de facto standard technique for connecting LLMs to the data they need to generate responses. As the name suggests, the general pattern for implementing RAG involves three steps:

Retrieval – Additional context is retrieved from an external knowledge base. In this context, I’m using “knowledge base” as a loosely defined term that could include API calls, SQL queries, vector search queries, or any other mechanism used to find relevant context to provide to the LLM.

Augmentation – The user’s input is augmented with the relevant context obtained in the retrieval step.

Generation – The LLM uses its pretrained knowledge along with this augmented context to generate a more accurate response, even about topics and content it was never trained on.

Using RAG in a question answering system would therefore look something like this:
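As a minimal sketch of the three steps, the Python below wires retrieval, augmentation, and generation together. Everything in it is an assumption made for illustration: the keyword-overlap retriever stands in for whatever lookup your knowledge base supports, and `fake_llm` is a placeholder for a real model call.

```python
from typing import Callable, List


def retrieve(query: str, knowledge_base: List[str], top_k: int = 2) -> List[str]:
    """Retrieval: rank documents by how many query words they share (toy scoring)."""
    query_words = set(query.lower().split())
    scored = [
        (len(query_words & set(doc.lower().split())), doc) for doc in knowledge_base
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents with no overlap at all.
    return [doc for score, doc in scored[:top_k] if score > 0]


def augment(question: str, context: List[str]) -> str:
    """Augmentation: combine the retrieved context with the user's input."""
    context_block = "\n".join(f"- {item}" for item in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )


def answer_question(
    question: str, knowledge_base: List[str], llm: Callable[[str], str]
) -> str:
    """Generation: pass the augmented prompt to the model."""
    context = retrieve(question, knowledge_base)
    return llm(augment(question, context))


def fake_llm(prompt: str) -> str:
    """Placeholder for a real LLM call; it only reports the prompt size."""
    return f"(model response to a {len(prompt)}-character prompt)"
```

In a real system, `retrieve` is where an API call, SQL query, or vector search would go; the other two steps change very little.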

Agentic systems will almost always require some implementation of RAG to perform their responsibilities. However, when designing agentic systems it’s important to consider how different types of data can impact the requirements of the system as a whole.

Structured Data and APIs

Companies that have developed mature API programs will find their path to value much easier to navigate than those without. That’s because much of the data required to power agentic systems is the same data that’s being used to power non-agentic systems today.

An insurance company that’s built APIs to generate insurance quotes can plug that API into an agent much more easily than one still trapped in the client/server era.

In fact, agentic systems are poised to disrupt almost every aspect of the insurance business. From quoting and underwriting to actuarial science (the oddsmakers of the insurance business) and claims management, agentic AI is likely to take over these roles completely in the next 2-3 years, but only if the agentic systems have access to the right data and capabilities.

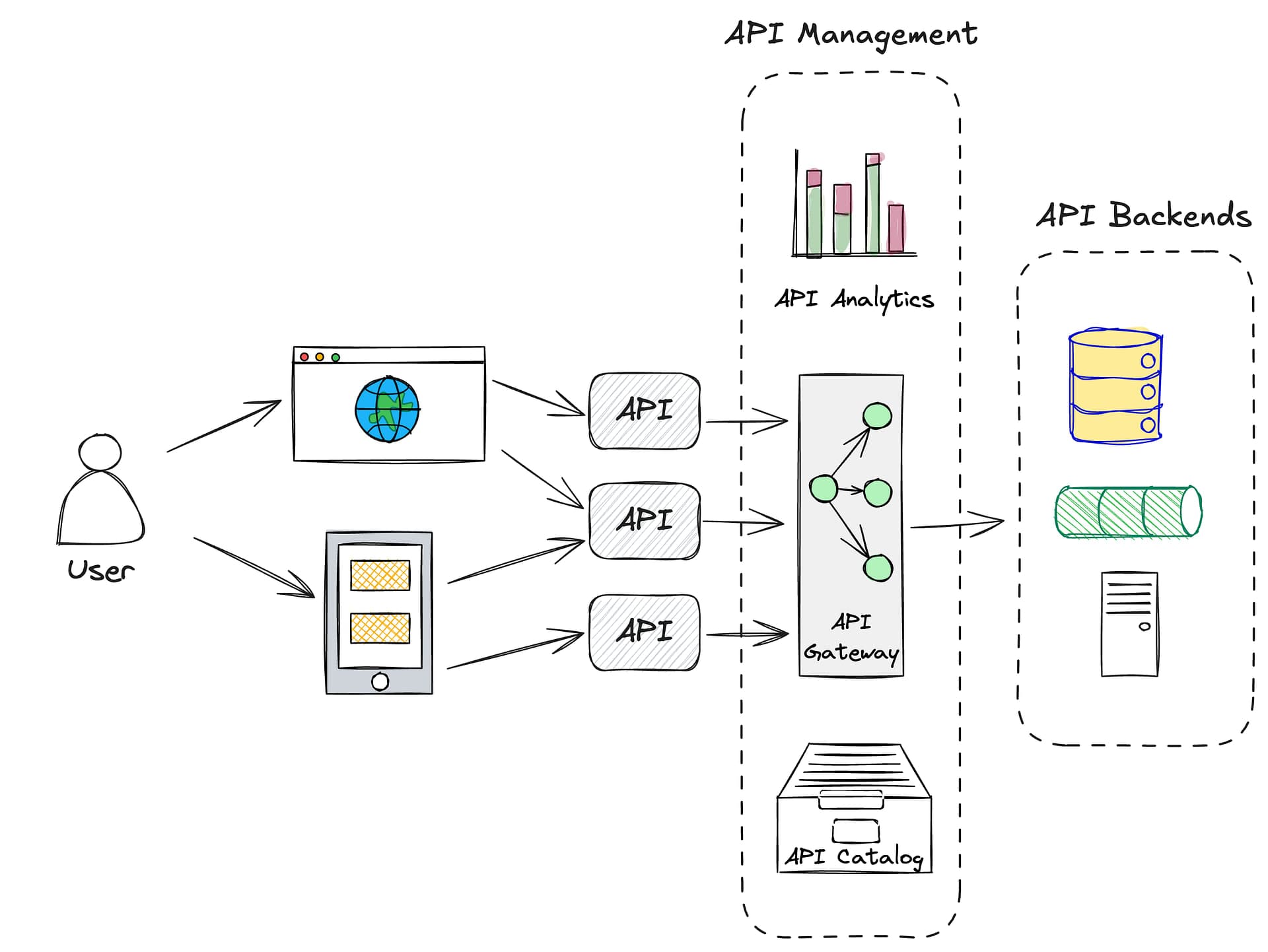

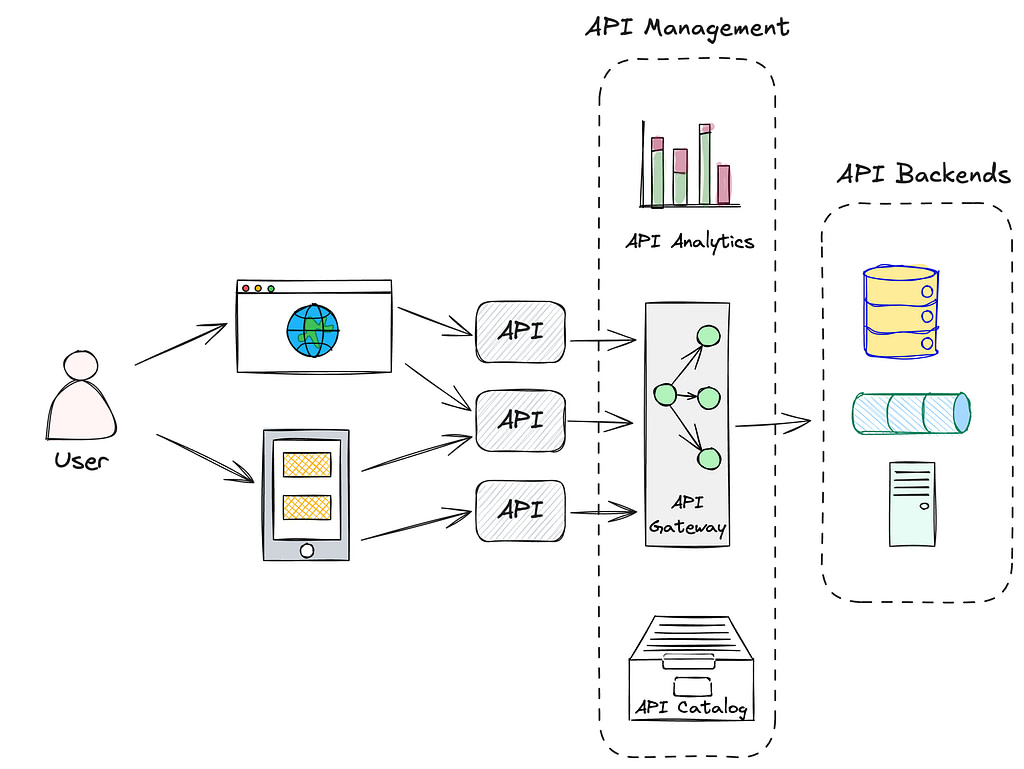

AI-First API Management

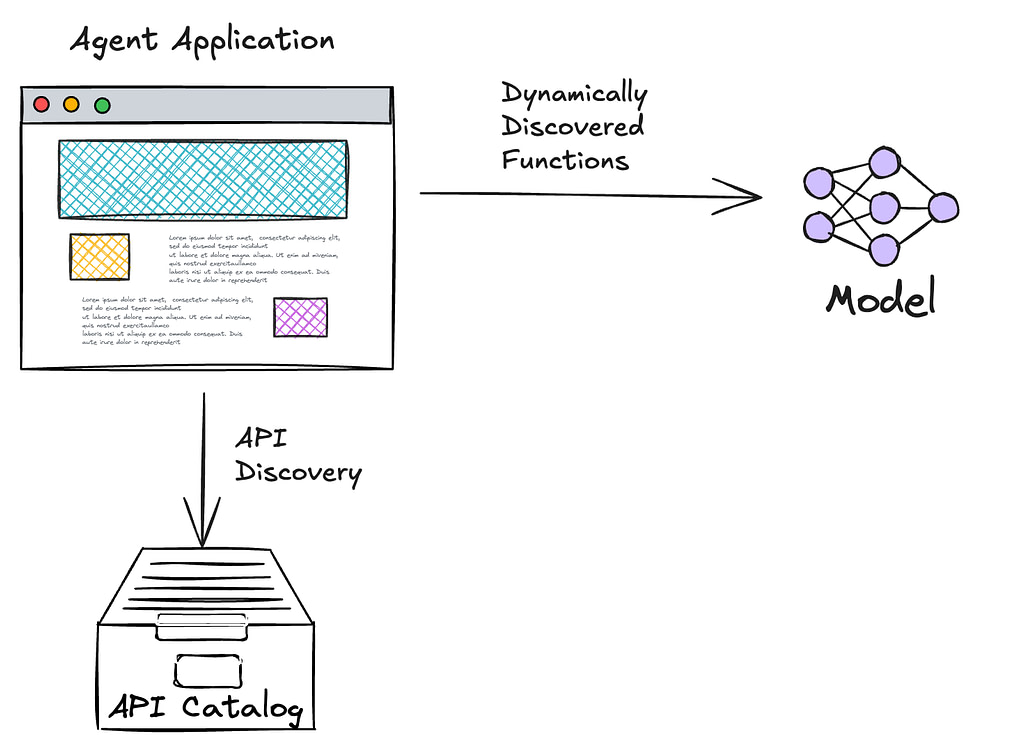

Many companies equate API management with having an API gateway. In the world of agentic systems, however, OpenAPI (Swagger) becomes a key enabler for letting agents consume existing APIs. That’s because the JSON Schema used in OpenAPI can be easily converted into the function definition structure used by many LLM providers.
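To make the conversion concrete, here is a rough sketch that turns a single OpenAPI operation into a generic function definition. The output shape (`name`, `description`, and a JSON Schema `parameters` object) is a common pattern, but the exact wrapper differs between LLM providers, so treat it as an assumption. The helper name `openapi_operation_to_tool` is hypothetical, and the sketch does not resolve `$ref` pointers.

```python
import re
from typing import Any, Dict, List


def openapi_operation_to_tool(
    path: str, method: str, operation: Dict[str, Any]
) -> Dict[str, Any]:
    """Map one OpenAPI operation onto a generic function definition."""
    properties: Dict[str, Any] = {}
    required: List[str] = []

    # Path, query, and header parameters each become one property.
    for param in operation.get("parameters", []):
        schema = dict(param.get("schema", {"type": "string"}))
        if "description" in param:
            schema["description"] = param["description"]
        properties[param["name"]] = schema
        if param.get("required", False):
            required.append(param["name"])

    # A JSON request body, if declared, is exposed as a single "body" property.
    body_schema = (
        operation.get("requestBody", {})
        .get("content", {})
        .get("application/json", {})
        .get("schema")
    )
    if body_schema is not None:
        properties["body"] = body_schema
        if operation["requestBody"].get("required", False):
            required.append("body")

    # Fall back to a name derived from the method and path if operationId is missing.
    fallback_name = re.sub(r"[^A-Za-z0-9]+", "_", f"{method}_{path}").strip("_")
    return {
        "name": operation.get("operationId") or fallback_name,
        "description": operation.get("summary") or operation.get("description", ""),
        "parameters": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }
```

Because OpenAPI parameter schemas are already JSON Schema fragments, most of the work is regrouping them. That is why a well-documented API is comparatively cheap to expose to an agent.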

Additionally, service discovery mechanisms that allow agentic systems to look up and discover APIs present an interesting opportunity for agentic systems to create emergent capabilities on their own. Depending on your perspective, this is either an exciting or terrifying prospect.

With the proper guardrails in place, an API catalog search could even be exposed as an available function to the LLM, allowing it to look up additional capabilities that could be used to solve the current task.
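One way to picture this is a catalog search function that the agent can call like any other tool. The sketch below is purely illustrative: the catalog entries, the `agent_approved` flag, and the keyword scoring are all assumptions, with the flag standing in for whatever guardrail policy you actually enforce.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CatalogEntry:
    name: str
    description: str
    agent_approved: bool  # guardrail: only approved APIs are discoverable


# Hypothetical catalog contents, for illustration only.
CATALOG = [
    CatalogEntry("getQuote", "Generate an insurance quote for a customer", True),
    CatalogEntry("fileClaim", "Open a new insurance claim", True),
    CatalogEntry("deletePolicy", "Permanently delete a policy record", False),
]


def search_api_catalog(query: str, limit: int = 3) -> List[Dict[str, str]]:
    """Return approved APIs whose name or description mentions the query terms."""
    terms = set(query.lower().split())
    matches = []
    for entry in CATALOG:
        if not entry.agent_approved:
            continue  # never surface APIs the agent is not allowed to use
        haystack = f"{entry.name} {entry.description}".lower()
        score = sum(1 for term in terms if term in haystack)
        if score > 0:
            matches.append((score, entry))
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [
        {"name": entry.name, "description": entry.description}
        for _, entry in matches[:limit]
    ]
```

The important design choice is that the filter runs inside the search function, so an unapproved API cannot be discovered no matter how the model phrases its query.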

Unstructured Data and RAG

While most companies have mature practices to manage operational, analytical, and other types of structured data, unstructured data management usually lags far behind.

Documents, emails, PDFs, images, customer service transcripts, and other free-form text sources can be incredibly valuable for agentic systems—but only if you can retrieve the right information, at the right time, in the right format. That’s where retrieval augmented generation becomes particularly powerful.

Why Unstructured Data Is Challenging

Unstructured data tends to be larger in scope and more heterogeneous in format (PDFs, images, raw text, HTML, etc.). This variability can overwhelm naive approaches to data retrieval.

Unlike structured data stored in relational tables, unstructured data has no enforced schema. You can’t simply run a SQL query on a PDF, or do a direct “look up” in a spreadsheet.

This has led to the rapid adoption of new technologies to solve these challenges.

Vector Databases and Semantic Search

Modern vector databases and semantic search techniques have paved the way for unstructured data retrieval at scale. These systems convert text into high-dimensional vector embeddings, allowing for similarity-based lookups. A user or agent query is also converted into a vector, and the closest vectors in the database (i.e., the most contextually similar text chunks) are retrieved.
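The lookup itself can be sketched in a few lines. In the example below, `toy_embed` is a deliberately crude stand-in for a real embedding model: it produces sparse word-count vectors rather than dense embeddings, so it only captures word overlap, not meaning. The cosine similarity ranking, however, is the same idea a vector database applies, usually with approximate nearest-neighbor indexes rather than this brute-force scan.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def toy_embed(text: str) -> Vector:
    """Stand-in for an embedding model: a sparse word-count vector."""
    return {word: float(count) for word, count in Counter(text.lower().split()).items()}


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if either is empty)."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_index(chunks: List[str]) -> List[Tuple[str, Vector]]:
    """Embed every chunk once, up front."""
    return [(chunk, toy_embed(chunk)) for chunk in chunks]


def nearest_chunks(
    query: str, index: List[Tuple[str, Vector]], top_k: int = 2
) -> List[Tuple[float, str]]:
    """Embed the query and return the top_k most similar chunks with scores."""
    query_vector = toy_embed(query)
    scored = [(cosine_similarity(query_vector, vec), chunk) for chunk, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```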

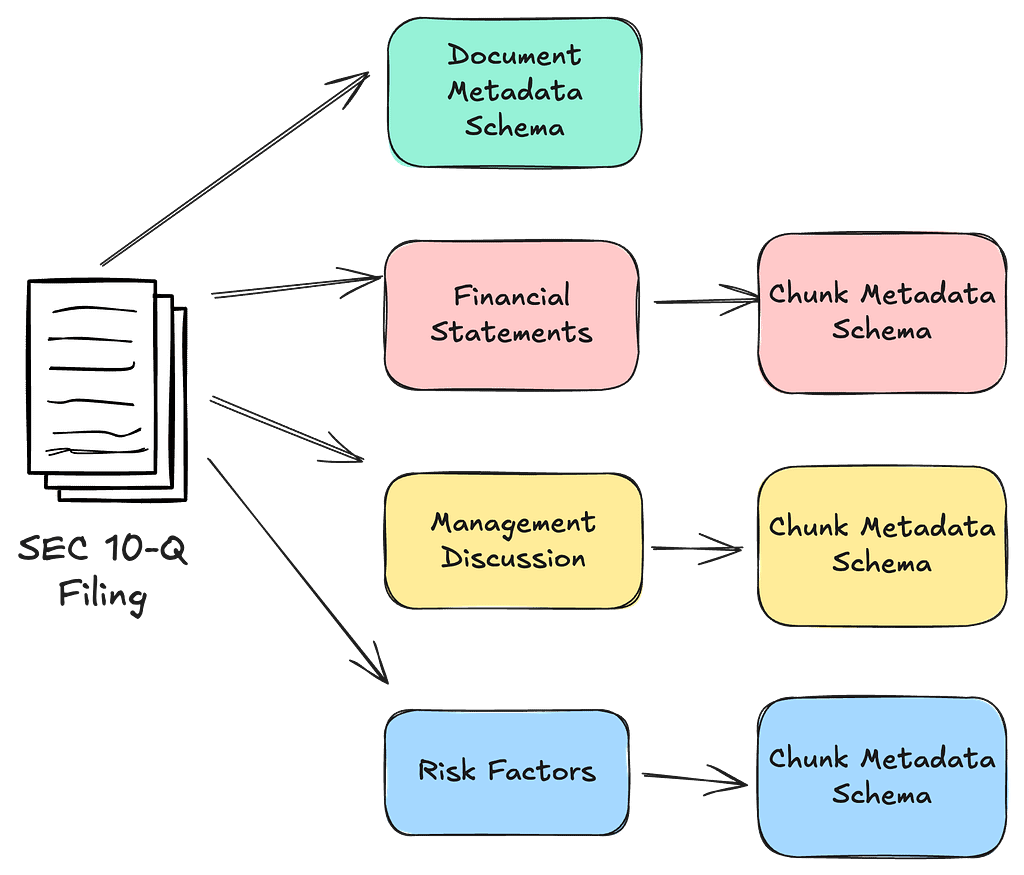

Large documents are split into smaller “chunks,” each separately indexed. This allows your retriever to retrieve only the relevant chunk(s), rather than stuffing the entire document into the prompt.
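A simple way to chunk is a sliding window over words with some overlap, so that a sentence cut at a boundary still appears whole in one of the neighboring chunks. This is only one strategy among many, and the default sizes below are arbitrary assumptions rather than recommendations.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into word windows of chunk_size, each sharing `overlap` words
    with the previous window."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the document.
        if start + chunk_size >= len(words):
            break
    return chunks
```

Production pipelines often split on sentence, paragraph, or heading boundaries instead, but the trade-off is the same: smaller chunks retrieve more precisely, while larger ones carry more surrounding context.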

Many vector DBs let you attach metadata (e.g., author, date, document type) to each chunk. This metadata can guide downstream logic—for example, retrieving only the latest product manuals or knowledge base articles relevant to the user’s issue.

In high-traffic scenarios or where data quality is critical (e.g., legal or medical use cases), you can apply additional filters and ranking criteria after the semantic search to ensure only top-quality or domain-approved content is returned.
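The metadata filtering and post-search ranking described above might look something like the sketch below. The `Hit` fields (`doc_type`, `published`, `approved`) are hypothetical metadata chosen for illustration. Many vector databases can apply filters like these inside the query itself, which is usually preferable to filtering afterward in application code.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Hit:
    text: str
    score: float      # similarity score returned by the semantic search
    doc_type: str     # e.g. "manual", "blog"
    published: date
    approved: bool    # domain-approved content only


def filter_and_rank(
    hits: List[Hit], doc_type: str, published_after: date, top_k: int = 3
) -> List[Hit]:
    """Keep approved hits of the requested type and age, best score first."""
    eligible = [
        hit
        for hit in hits
        if hit.approved and hit.doc_type == doc_type and hit.published >= published_after
    ]
    # Rank by similarity; newer documents win ties.
    eligible.sort(key=lambda hit: (hit.score, hit.published), reverse=True)
    return eligible[:top_k]
```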

RAG Pipelines for Unstructured Data Processing

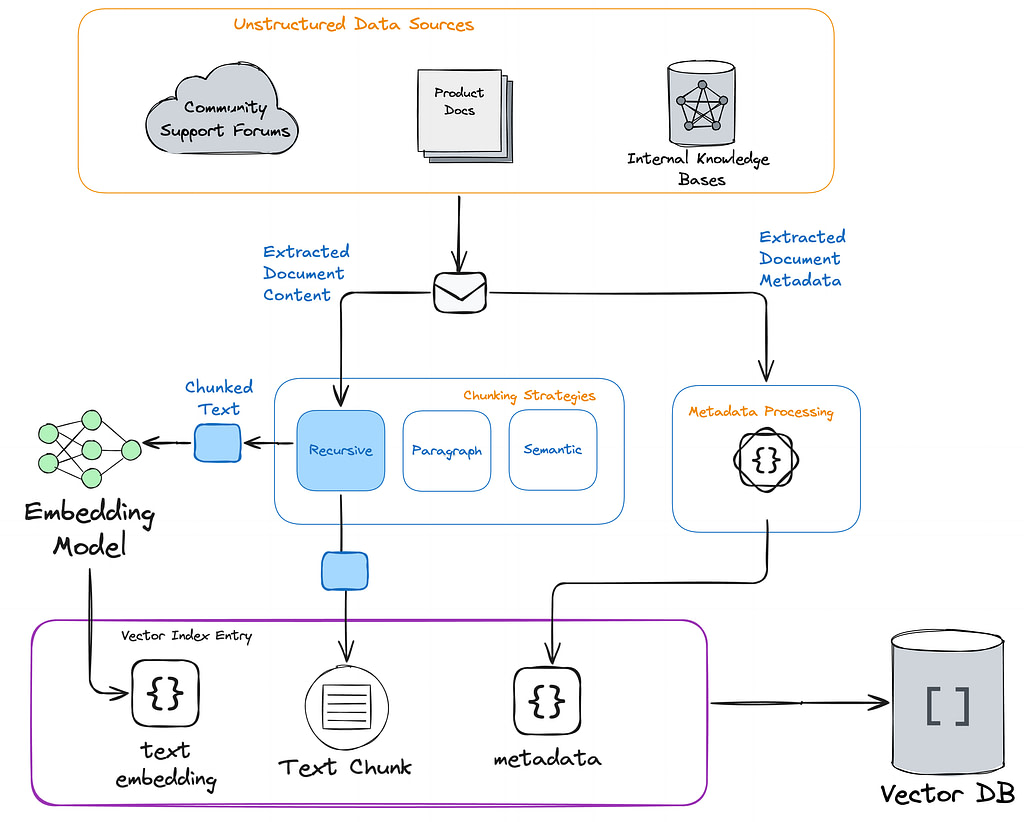

RAG pipelines serve as the connective tissue between unstructured data sources and the vector database. They typically include several steps, such as extraction, chunking, and embedding, to transform messy or free-form documents into an optimized search index that can provide your LLM with relevant context.

The pipeline diagram shows how data flows in from various unstructured data sources. These could include knowledge bases, documents in file systems, web pages, and content in SaaS platforms, among many others.

Inside a RAG pipeline, unstructured data undergoes a series of transformations. These include extraction, chunking, metadata processing, and embedding (vector) generation, before being written to a vector index. These pipelines provide the critical function of surfacing the most relevant pieces of information when your LLM needs to answer a question or complete a task.
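Put together, those transformations can be sketched as one small function. This is an illustration under heavy assumptions: the regex-based `extract_text` stands in for a real document parser, the `embed` argument is whatever embedding function you supply, and the plain Python list stands in for a vector index.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class IndexRecord:
    chunk: str
    embedding: List[float]
    metadata: Dict[str, str]


def extract_text(raw_html: str) -> str:
    """Extraction: strip tags and collapse whitespace (toy stand-in for a parser)."""
    without_tags = re.sub(r"<[^>]+>", " ", raw_html)
    return re.sub(r"\s+", " ", without_tags).strip()


def split_into_chunks(text: str, chunk_size: int) -> List[str]:
    """Chunking: fixed-size word windows with no overlap."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def run_pipeline(
    source_id: str,
    raw_html: str,
    embed: Callable[[str], List[float]],
    index: List[IndexRecord],
    chunk_size: int = 50,
) -> int:
    """Extract, chunk, attach metadata, embed, and write to the index.
    Returns the number of records written."""
    text = extract_text(raw_html)
    pieces = split_into_chunks(text, chunk_size)
    for position, piece in enumerate(pieces):
        metadata = {"source": source_id, "position": str(position)}
        index.append(IndexRecord(piece, embed(piece), metadata))
    return len(pieces)
```

Each stage is a place where quality can be won or lost, which is why these steps are worth keeping separate and independently testable.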

Because unstructured data often lives in disparate silos, keeping it synchronized and up to date can be a challenge. In large enterprises, some unstructured data sources are changing constantly, meaning that the RAG pipeline must capture and process these changes with minimal delay.

Stale embeddings or outdated text chunks can lead to inaccurate or misleading answers from your AI system, especially if users are seeking the latest data on product updates, policy changes, or market trends. Ensuring your pipeline is equipped to handle near real-time updates—whether it’s through event-driven triggers, scheduled crawls, or streaming data feeds—significantly boosts the quality and trustworthiness of the system’s outputs.
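One common way to keep an index fresh without reprocessing everything is to compare content hashes. The sketch below assumes you store a hash per document at indexing time; `plan_sync` is a hypothetical helper that works out what a scheduled crawl or change event should re-embed and what it should delete.

```python
import hashlib
from typing import Dict, List


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's current content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(
    current_docs: Dict[str, str], indexed_hashes: Dict[str, str]
) -> Dict[str, List[str]]:
    """Compare source documents against the hashes recorded at indexing time.

    current_docs maps document id to current text.
    indexed_hashes maps document id to the hash stored when it was last indexed.
    """
    # New or changed documents need to be chunked and embedded again.
    to_upsert = [
        doc_id
        for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    ]
    # Documents that disappeared from the source should leave the index too.
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]
    return {"upsert": sorted(to_upsert), "delete": sorted(to_delete)}
```

Unchanged documents are skipped entirely, which keeps embedding costs proportional to how much the source actually changed.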

Optimizing RAG Performance

Developers who are familiar with the data engineering practices used to build data pipelines are often unprepared for the nondeterministic nature of vector data.

With a traditional data pipeline, we know what our data should look like in both the source system and the destination system. For example, a set of relational tables in PostgreSQL might need to be transformed into a single flattened structure in BigQuery. We can write tests that tell us whether or not data from our source system was correctly transformed into the representation we want in our destination system.
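To see why the traditional case is easy to test, consider a toy version of that flattening step. The table shapes and field names here are invented for illustration. What matters is that the expected output is known exactly, so a plain equality assertion is enough.

```python
from typing import Any, Dict


def flatten_order(customer: Dict[str, Any], order: Dict[str, Any]) -> Dict[str, Any]:
    """Join one customer row and one order row into a single flat record."""
    if order["customer_id"] != customer["id"]:
        raise ValueError("order does not belong to this customer")
    return {
        "order_id": order["id"],
        "customer_id": customer["id"],
        "customer_name": customer["name"],
        "total_cents": order["total_cents"],
    }


def check_flatten_order() -> None:
    """A deterministic test: the same input always yields exactly this output."""
    customer = {"id": 7, "name": "Ada"}
    order = {"id": 1001, "customer_id": 7, "total_cents": 2599}
    expected = {
        "order_id": 1001,
        "customer_id": 7,
        "customer_name": "Ada",
        "total_cents": 2599,
    }
    assert flatten_order(customer, order) == expected
```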

When dealing with unstructured data and vector indexes, neither the source nor the destination is well known. Web pages and documents contain

What’s Next?

In the fifth and final part of this series, we’ll look at cross-cutting concerns. We’ll dig into areas such as security, observability, tool isolation, and a few other important things that agentic system designers need to consider.