Top 5 Gen AI technologies leading businesses are adopting right now

The accidental architecture. We’ve all seen it. A project comes down from the top. Urgent priority. Needs to be done yesterday. So we perform the heroics, ship the project, and celebrate the victory.

Then we start building on top of that initial architecture. We didn’t have time for long term visions. As a result, things don’t quite fit together the way we’d like. We bolt-on solutions, we hack together mismatched parts. Before long, we’re staring down at a mountain of technical debt that makes every subsequent project riskier, slower to build, and less stable.

Most organizations are at a fork in the road with their generative AI stack. They’ve launched pilots. They’re starting to see benefits. They know that the majority of gen AI project work is still ahead of them. If they keep plowing ahead, they know their architecture will turn into a house of cards.

Smart enterprise technology leaders are planning for the future. They’re taking a strategic approach to bring in the key capabilities they need to deliver AI projects at scale. For these companies, each project ships faster, and they are building a tech stack to create lasting, competitive differentiation through AI.

Surveying the current enterprise landscape

Most enterprises are in the piloting stage of generative AI adoption. According to Deloitte, around two thirds of the enterprises they included in their research have successfully launched some generative AI initiative and are planning to increase investment going into 2025.

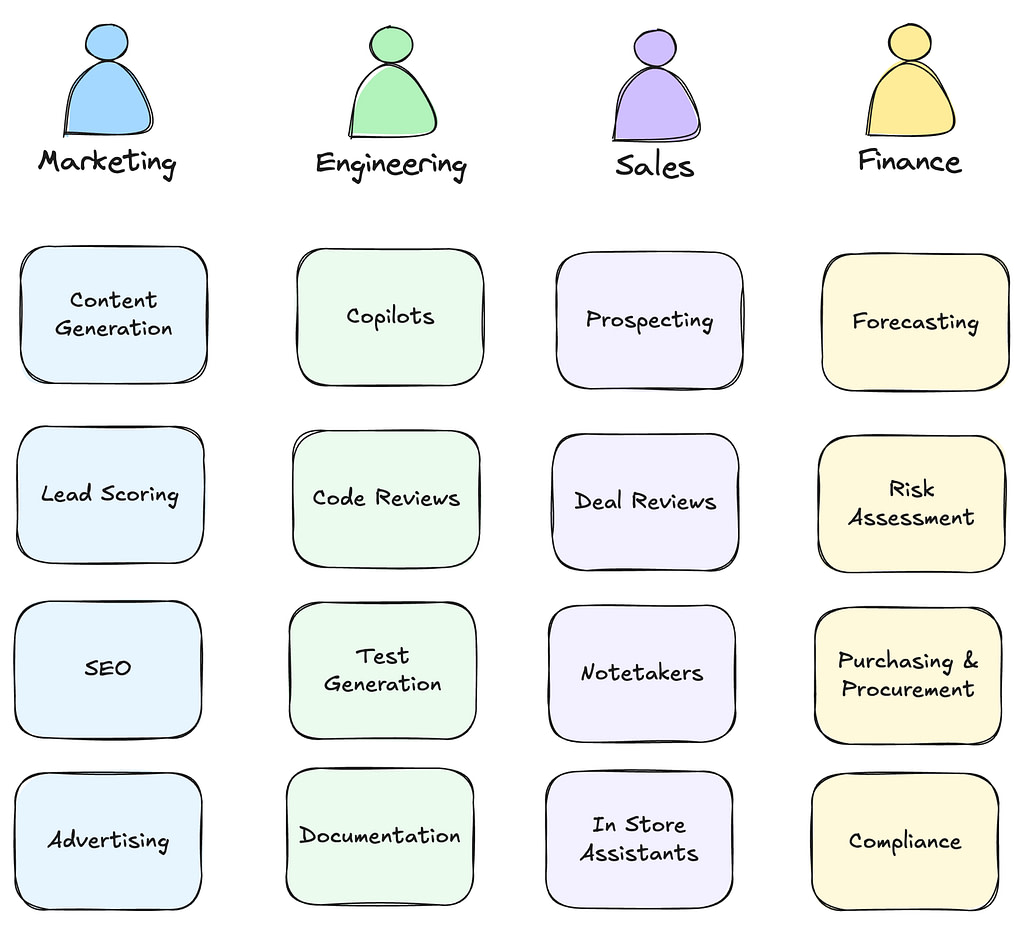

Most common generative AI pilot projects

Tool adoption is one of the most common approaches for enterprises who have dipped their toes in the water.

These include role-specific capabilities like GitHub copilot or Cursor which helps to accelerate software development. AI notetakers also have improved communication and workflow for managers who want to synthesize the outcomes of meetings and necessary next actions. Sales automation, content automation, and many others are among the popular tools that businesses have experimented with to streamline their operations.

General purpose tools like ChatGPT or Anthropic’s Claude are also wildly popular. These tools have given everyone from marketers to software engineers to executive assistants a flexible way use AI to accomplish their everyday tasks faster.

But these pilots have a major problem that many enterprises haven’t yet recognized.

Your data is your differentiator

Every successful enterprise has a set of core business processes they need to own in their entirety. Retailers live or die by their supply chain management – having the right products in stock when customers want them is essential to success. Insurance companies must excel at assessing risk and processing claims efficiently, as these capabilities directly impact their profitability and customer satisfaction. Oil and gas companies depend on their expertise in exploration and extraction, where small improvements can generate billions in additional revenue.

Adopting off-the-shelf solutions, giving those solutions access to their highly protected, proprietary data, and outsourcing these processes is a nonstarter. Innovative enterprises are preparing for the transformation nature of generative AI. They recognize that they need to develop their in-house capabilities and they need to do it now.

Here’s how those companies are doing it.

Top 5 gen AI technologies that companies are adopting now

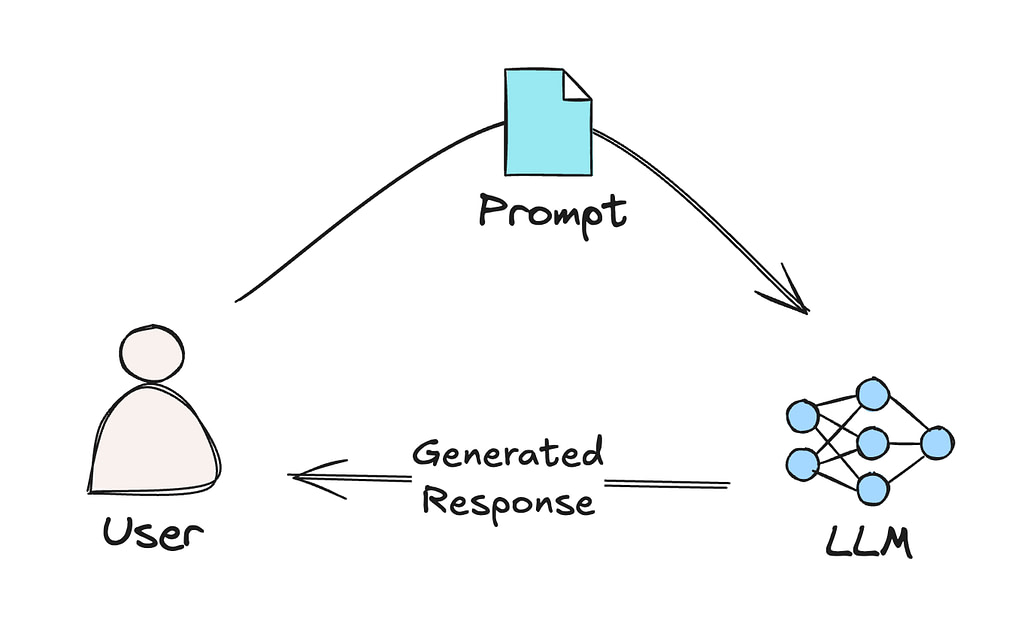

#1 – Vector Databases

Large language models (LLMs) are great, but they don’t know about your data. This means we need a way to bridge the gap to connect your proprietary data to your LLM.

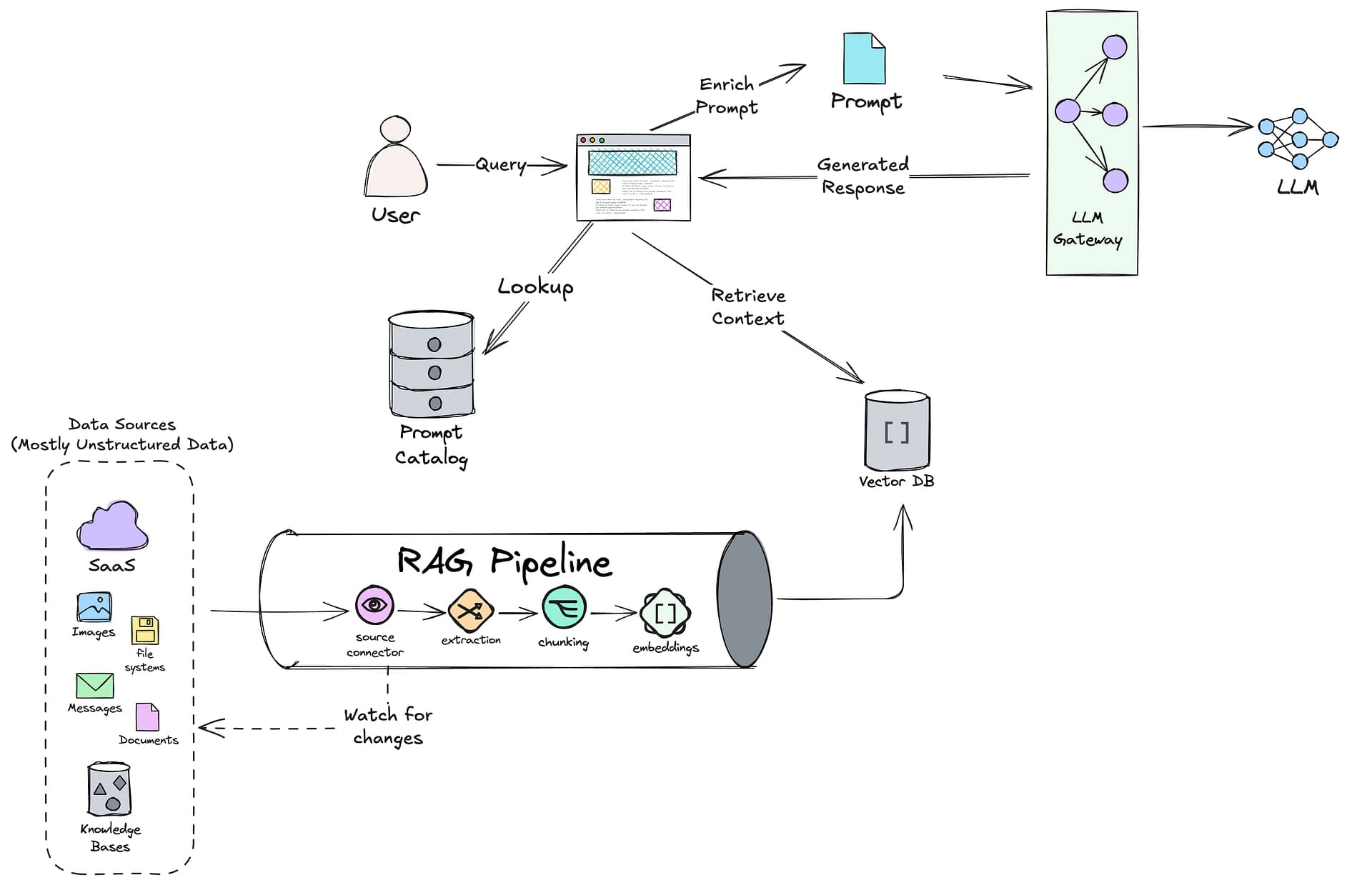

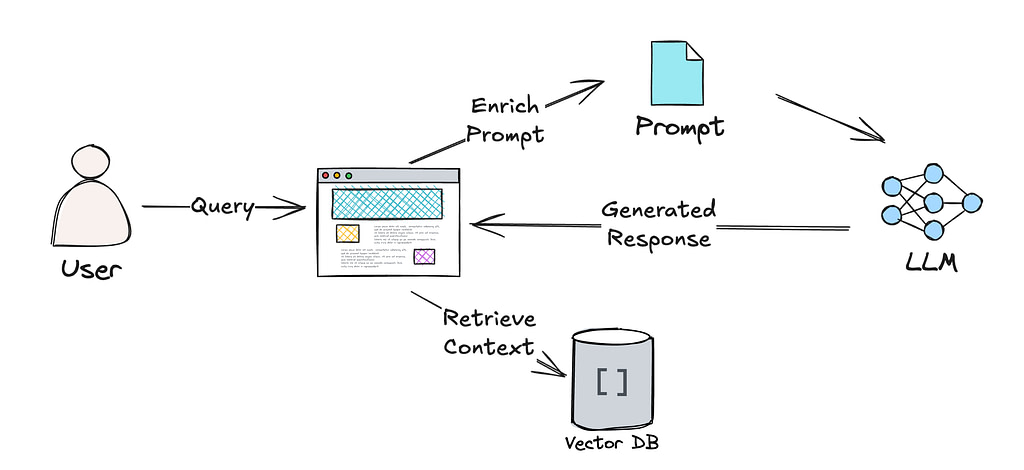

The standard approach for accomplishing this is with Retrieval Augmented Generation (RAG).

Much of the data that LLMs rely on is unstructured data. For most enterprises, this is one of the most underdeveloped areas in their data capabilities. Vector databases provide a way to easily search through unstructured data to find relevant content which can be provided to the large language model.

One of the most important technologies in a generative AI tech stack is a vector database.

#2 – RAG Pipelines & Unstructured Data ETL

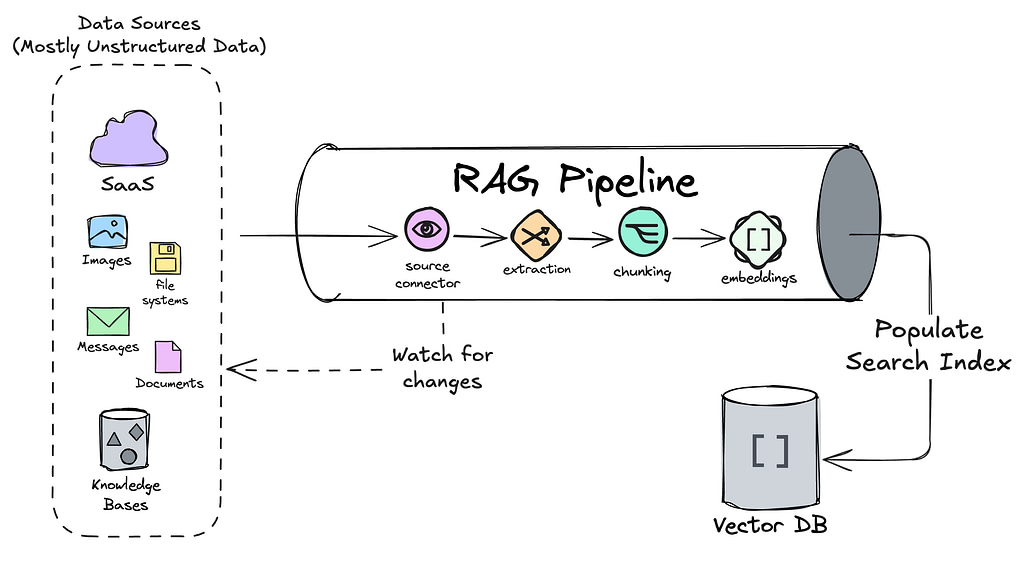

In order to transform your unstructured data into vector data, you need a considerable amount of preprocessing and data transformations.

Unstructured data can live in every corner of your organization. From documents in file systems like Google Drive, Share Point, and One Drive to meeting notes in SaaS platforms like SalesForce, most existing data integration and ETL platforms aren’t capable of populating vector databases. This is because most of those platforms were made for structured data, pushing tabular data from one database into structured formats in downstream databases.

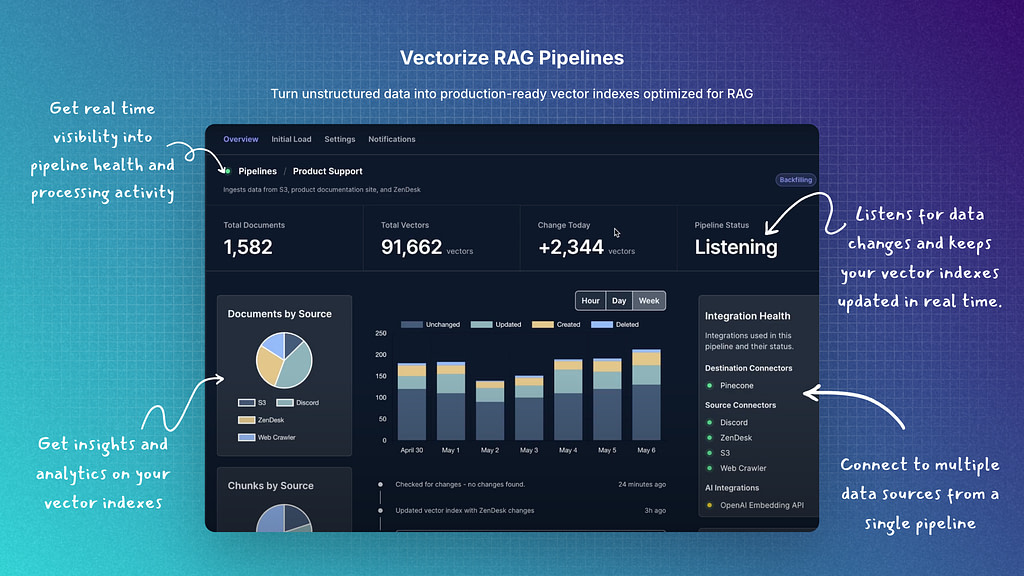

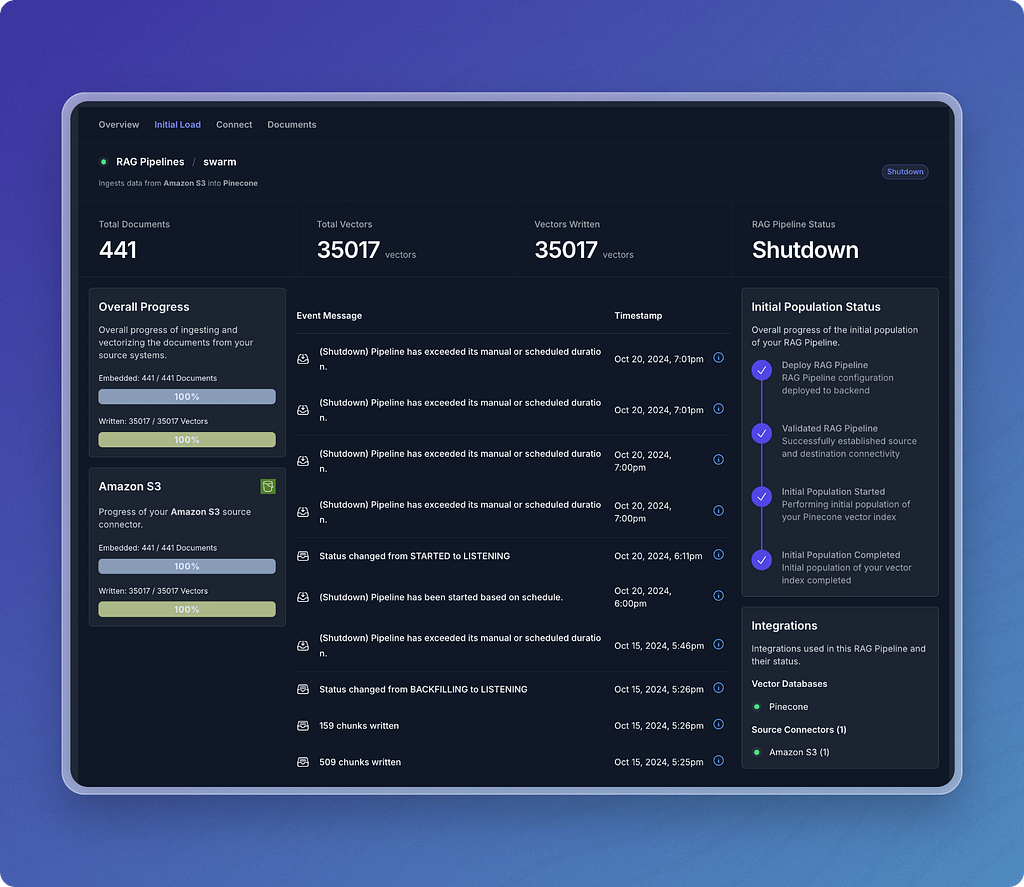

Likewise, vector search indexes will become outdated and return incorrect information without a strategy to keep the data fresh. This is where a solution like Vectorize becomes essential.

A huge portion of the time it takes to deliver a generative AI solution goes into data wrangling and transforming unstructured data into vector search indexes. Having a purpose built platform to solve these requirements is essential for any business that values accurate LLM outputs and fast project delivery.

#3 – AI Agent Frameworks

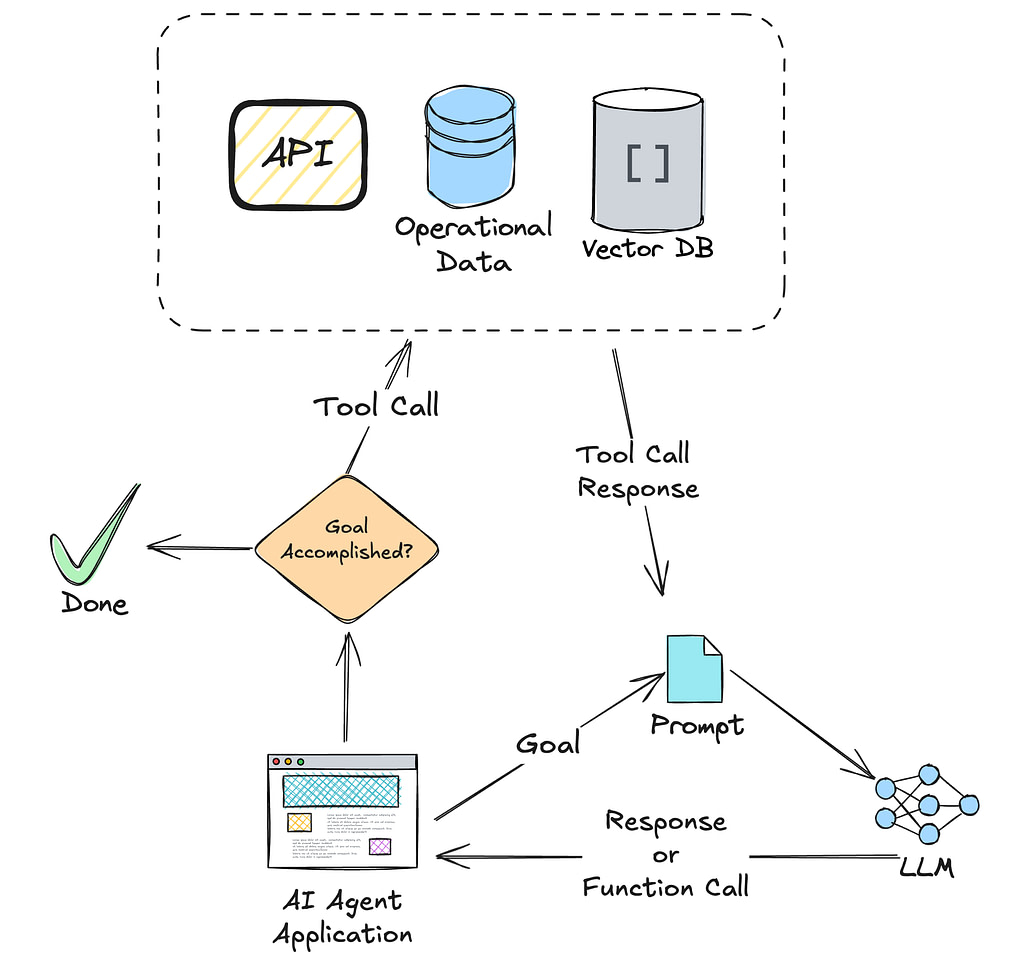

As AI become integrated into core business processes, the complexity of processing surges. Agentic approaches are becoming the go-to approach for businesses to achieve accurate automation across broader scopes of their business.

While many frameworks exist, enterprises are spending a considerable amount of time to find options that give them the same production-readiness they have in other areas of their software development competencies.

Low-code options are generally not built with mission-critical use cases in mind. Open source frameworks aren’t built for enterprises who have adopted dev-ops strategies and are leveraging distributed architectures for the flexibility they bring.

#4 – Prompt Catalogs

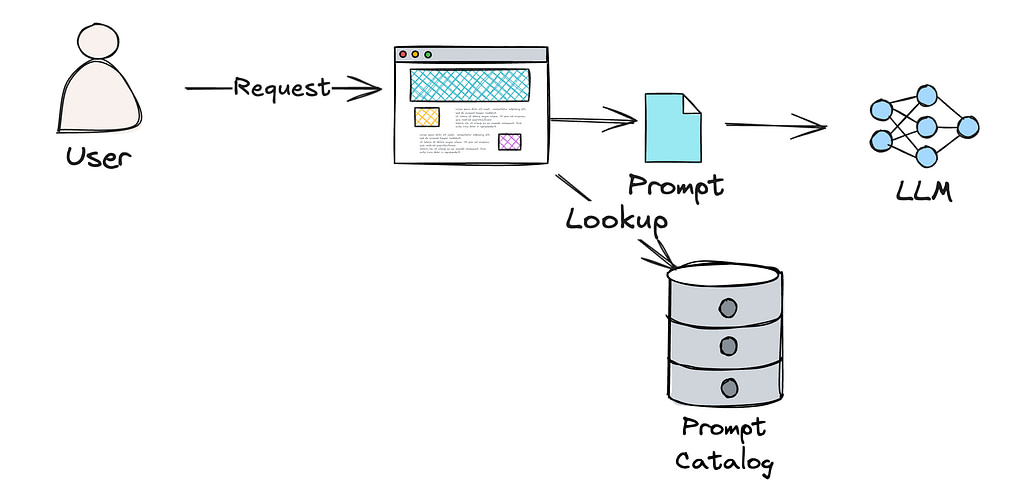

As AI agents become more common, enterprises often want to achieve the same level of reuse across agents they have have in other parts of their architecture.

This often means identifying the best performing prompts for a given use case and inserting that prompt all the places where it’s being used. For this reason Prompt Management and Prompt Catalogs are showing up in more companies’ technology strategies.

Prompt management can eliminate the trial and error that typically goes into assessing the effectiveness of a given prompt. Searching for existing prompts, versioning prompts, trying different iterations in a controlled way are all useful ways to help companies incrementally improve the performance of their AI applications.

#5 – LLM or Generative AI Gateways

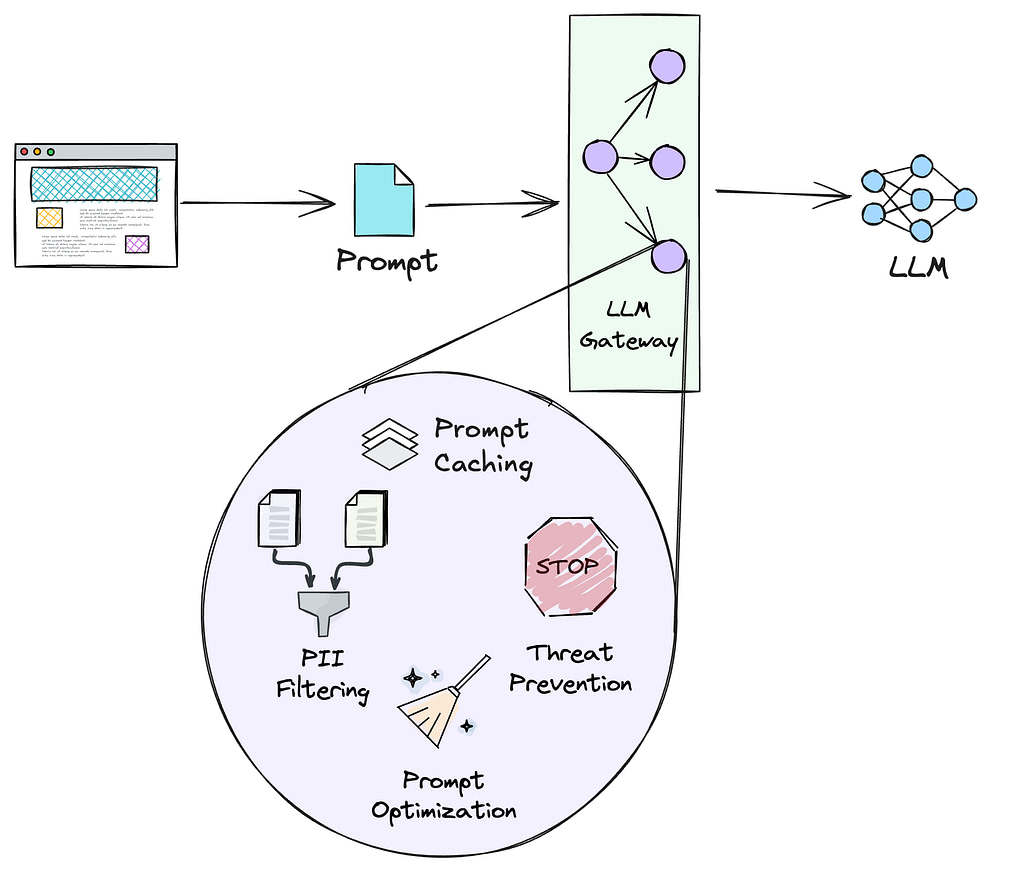

Generative AI has introduced new requirements and opened up new attack surfaces that companies need to consider. This has led to an increased interest in LLM gateways (sometimes called generative AI gateways).

LLM gateways behave in a similar way to an API gateway. They sit between the application and the LLM and can address cross cutting requirements centrally instead of inside each individual application. These requirements include things like prompt caching, where LLM responses for the same request are served from cache instead of going to the LLM every time.

They also include security features such as jailbreaking attacks which use specific prompt instructions to bypass safety protocols. They can also help improve results by optimizing the language used in the prompt and filtering out irrelevant information which can lead to the LLM getting confused.

Building a Gen AI platform? Get your data right first.

Data is the foundation that the rest of your generative AI platform is built on. If you don’t have the right data capabilities in place, you’ll find your company falling behind competitors, struggling to ship projects, and achieving subpar results from the solutions you do manage to ship.

Here’s how Vectorize can solve your data integration challenges while your competitors are still figuring out where to start.

A data-driven approach to achieving better results

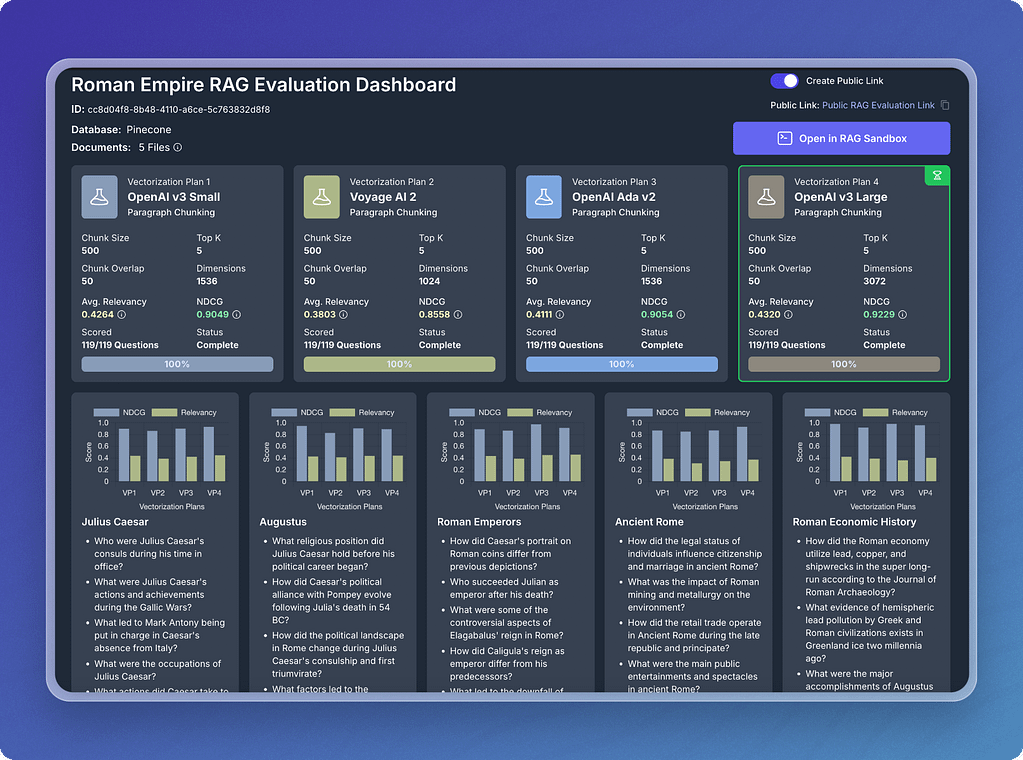

If you want your LLM to have the best, most relevant context, pushing your data into a vector database is not enough. You need to know exactly how to prepare your data and build your vector search indexes to get back the most relevant, accurate information.

Vectorize gives you an advanced RAG evaluation engine to make this process simple. With Vectorize, you can identify the exact extraction, chunking, and embedding model strategies that will deliver the best context to your LLM.

This means more accurate responses for your users without laborious analysis efforts to optimize your search indexes for useful results.

Deliver AI agents and RAG applications 5x faster

Most of the time required to build a generative AI application is spent on data preparation and optimization. If your LLM doesn’t have reliable access to the right data, everything else falls apart.

With Vectorize RAG pipelines, you have a production ready solution to ensure your LLM will always get back the most relevant, up to date data it needs to respond to user queries.

Vectorize is able to ingest data from a wide variety of sources. When data changes, your vector indexes are updated automatically. This means your users never get responses that reflect outdated information.

See how Vectorize can help your business

If AI is important to your business, let us show you how Vectorize can solve the most time consuming data challenges you’ll face building out your generative AI platform.

To see Vectorize in action, click here to schedule a demo now.