The Ultimate Guide To Vector Database Success In AI

Introduction

Vector databases have exploded in popularity over the past several years. At first, this popularity was driven by predictive AI use cases like recommendation engines and fraud detection. But increasingly, this adoption is all coming from generative AI.

What is vector data?

Let’s say that we decide to launch a very useless version of Netflix where we only offer 5 movies and we only have 5 users for our service. To decide what movies to recommend to each user, we might start by looking at movies each user has watched all the way through:

| ET | The Godfather | The Matrix | Star Wars | Indiana Jones | |

| Mike | ✔️ | ✔️ | |||

| Diane | ✔️ | ✔️ | |||

| Melissa | ✔️ | ✔️ | |||

| Bob | ✔️ | ✔️ | ✔️ | ||

| Ruth | ✔️ | ✔️ | ✔️ |

Then to decide which movies to recommend for a given user, we might look at the movies each user has watched to find similar users who share the same taste in movies. If there are no exact matches, then we move on to see which user is closest. For example here, Mike and Bob both watched The Godfather and Star Wars, but Bob also watched ET, so that might be a good recommendation for Mike.

This example is trivial, but imagine that we were performing this exercise with 500k movies or 50M users. Now this problem becomes much more challenging and where high-dimensional vector data is an important tool to solve these problems.

One possible vector representation would be to represent every cell in our table as a 1 or a 0, using a machine learning technique called one hot encoding:

Mike: [0,1,0,1,0]

Diane: [1,0,0,0,1]

Melissa: [1,1,0,0,0]

Bob: [1,1,0,1,0]

Ruth: [0,1,0,0,1]If you ever studied calculus and/or linear algebra, you may be thinking that we could probably do matrix operations on this data to yield some interesting results or compare high-dimensional vectors using techniques like dot products or cosine similarity and you would be exactly correct! As we’ll see later in this post, these techniques are widely used in vector databases!

Vector embedding models and the explosive growth of vector data

While use cases like recommendation engines were certainly valuable, they aren’t responsible for the massive adoption of vector database capabilities that we’ve seen since the launch of ChatGPT. As more developers and companies started exploring ways to use large language models (LLMs), they often encountered limitations of pre-trained foundational model like GPT. These models didn’t have access to the necessary internal information required to answer questions for customers or match the tone and style that a company’s marketing department had built up over the years.

As a result, companies went looking for the most effective way to provide an LLM with relevant context. What they usually found is a certain type of machine learning model that was created to handle exactly this type of problem: text embeddings.

A text embedding model provides a way to create mathematical representations of the semantic meaning of a string of text as an array of floating point numbers. This unstructured data could be used to identify the most similar vectors which in turn would give you the piece of relevant text which has opened up the door for the explosion of generative AI applications we’re now seeing today.

Other uses of vectors

While embeddings are an important use case, it’s important to remember that they are not the only application for vectors and that applications such as computer vision, image recognition, object detection, and classification tasks also benefit from vector representations.

What exactly is a Vector Database?

Now that we understand what vector data is and why it’s grown so much in popularity and importance, we can now consider the capabilities needed to store and query this type of data effectively.

At its core, a vector database is essentially a specialized type of search engine. Like almost all search engines (and traditional databases), it relies heavily on search indexes to facilitate this capability. Typically this process starts with a user providing a query vector, and requesting that the database perform an approximate nearest neighbor (ANN) search.

Just like our movie example above, an ANN search will retrieve the most similar vectors to the query vector supplied by the user. Because it’s common that the user needs more than just one vector retrieved, you’ll often see people refer to this as a k-ANN search where k is the number of nearest neighbors to return.

So two very important capabilities of a vector database are to efficiently store these data points so they can be searched, and to provide efficient vector search for all the vectors stored in search indexes within the database.

However, if the only data stored in a vector database was the vectors themselves, it wouldn’t be very useful as arrays of numbers aren’t particularly meaningful on their own. This is why there also needs to be capabilities to manage and access the metadata associated with each vector. This could be the chunk of text that was used to generate the vector along with all the data points you might want to retrieve as well, such as the document where the context was sourced from.

When combined with either generative AI models or more traditional machine learning models, a vector database becomes an immensely useful tool that provides capabilities often unavailable from a more traditional database.

Vector Databases vs. Relational Databases

Increasingly, this is becoming a false dichotomy as many databases like PostgreSQL add support for vector search with add-ons like pgVector. However, there are definitely architectural differences between general purpose databases and those purpose-built around the needs of this specialized vector-oriented data type.

The first core difference you’ll encounter as a developer is around data modeling. With specialized vector databases like Pinecone, the primary data type you’ll work with is a vector index. Alongside the vector index, you’ll typically have a metadata index which is used to manage the metadata associated with each vector.

Your data modeling for vector databases typically revolves around a fundamental decision of how many dimensions your vectors will have, which will be determined by the embedding model you use for generative AI use cases. You’ll also need to consider the metadata filtering and retrieval that you’ll need to support your use cases.

The query results you’ll receive from these systems will differ as well. A vector database will typically return a similarity measure such as the dot product, cosine similarity, or euclidean distance between the input vector and the nearest neighbors along with any requested metadata for the returned data points. The interface for vector databases is often a REST API or a Python/TypeScript library wrapper on top of that API.

Likewise, many older databases that are adding vector support were built for the J2EE era and rely on binary protocols with limited support for more modern languages. For this reasons, many AI engineers and app developers find themselves reaching for purpose built vector databases for greenfield applications while those incorporating generative AI capabilities into established applications reach for their existing database with a bolt-on option.

How Vector Databases Work

How Do Vector Databases Store Data?

Vector databases store data by using vector embeddings. Vector databases excel at managing high-dimensional vectors, typically by storing data on multiple nodes, often relying on sharding and/or partitioning techniques to provide scalable search across large volumes of vectors. This capability to store and index high-dimensional vector data efficiently sets vector databases apart from traditional databases.

Indexing these vectors, a process critical for performing efficient similarity searches, utilizes specialized structures optimized for Approximate Nearest Neighbor (ANN) search. This allows a vector database to quickly perform many vector similarity search requests against the underlying structures of the indexes. This augments metadata storage and allows vector databases to manage and search through massive datasets, a task that is difficult for traditional database systems.

Key Features of Vector Databases

A vector database excels at managing both high dimensional vectors and vector data within a multidimensional vector space, setting it apart from traditional relational databases. It is optimized for storing and indexing vector embeddings, essential components that encapsulate data’s essence, ranging from both numerical data and graph data to sensor data. This optimization allows LLMs to efficiently process and retrieve vectors and “understand” complex information.

Fast and Accurate Similarity Search

One of the key capabilities of a vector database is its ability to conduct efficient similarity searches through vector space, using similarity measures like cosine similarity. This functionality of vector search is crucial for applications such as semantic information retrieval and anomaly detection, aiming to find items or patterns similar to a given query in vector space. The precision and speed of these searches are enhanced by advanced indexing techniques, such as Approximate Nearest Neighbor (ANN) search, enabling quick retrieval of large vectors and facilitating approximate nearest neighbor search searches.

Significance of Similarity Search in AI

Vector search, or similarity search, enabled by a vector database, permits AI systems to precisely identify the nearest neighbors in a dataset based on complex similarity measures. This approach, based on vector embeddings, exceeds the capabilities of traditional databases by utilizing the vector database’s ability to manage vector embeddings and perform similarity searches across extensive, vector datasets. The use of vector embeddings and embedding models increases the relevance and accuracy of search results, crucial for tasks like image recognition, content recommendation, machine learning, and computer vision.

Handling Large Volumes of Vector Data

Most vector databases have an architecture that enables it to manage large data volumes within a multi-dimensional space efficiently. It employs data structures optimized for the nearest neighbor search and efficient similarity search, supporting complex AI applications that require access to vast amounts of training data, including those involved in machine learning, deep learning, and AI vision.

Real-time Updates

The dynamic capability of a vector database to support real-time access control and updates, including the addition of new vector embeddings and adjustments of managing vector embeddings indexes without significant downtime or re-indexing, is crucial. This feature is particularly important for applications requiring access control and immediate data updates, such as dynamic pricing models, fraud detection systems, and real-time content personalization machine learning models.

The unique capabilities of a vector database, make it an indispensable tool for modern AI applications.They contribute to the advancement of machine learning technologies and are a must-have for most enterpises.

Advanced Algorithms and Techniques

Vector databases use advanced algorithms and techniques for efficient storage, indexing, and retrieval of vectors, with Approximate Nearest Neighbor (ANN) search methods being key.

Hierarchical Navigable Small World (HNSW)

The HNSW algorithm, notable for its efficiency, constructs a multi-layered graph to enable swift navigation through each vector index within a hierarchy. This key algorithm is one of the most important aspects to how vector databases work.

How a Vector Database Indexes Vectors

Vector databases adopt various strategies for indexing, such as distributing data across nodes for parallel processing and using hashing to reduce search spaces. Data structure techniques also help vector databases excel in optimizing storage and retrieval processes based on the target similarity metrics.

The machine learning models you use to generate text embeddings will dictate the number of dimensions per vector. Each dimension corresponds to a feature in the hidden state of the model. In practice, this means that larger vectors can represent more nuanced meaning in language, but with a trade off around search performance and the storage required to manage your indexed vectors on an ongoing basis.

Optimizing Query Vector Retrieval

Optimizing query vector retrieval involves strategies to ensure fast and relevant search results. Distributing the dataset, utilizing machine learning models for organization, and implementing quantization are methods used by vector databases to enhance the efficiency of retrieving query vectors, critical for maintaining the performance of AI applications as data volumes grow.

Applications and Use Cases

Enhancing Generative AI and Large Language Models

The integration of vector databases significantly boosts the performance of AI models, especially in generative AI and large language models. These vector databases provide enable the efficient handling and retrieval of high-dimensional data, which is crucial for training sophisticated AI systems.

By providing rapid access to relevant vector embeddings, they enhance the AI models’ ability to understand and generate human-like text, images, or other content.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) is by far the single biggest driver for vector databases and represents a groundbreaking approach allowing developers to leverage unstructured data in generative AI use cases. RAG uses a query vector to fetch related chunks of unstructured data from a vector database, enriching the context available to a natural language processing model during the generation process. Retrieval augmented generation allows for the creation of more accurate, contextually rich, and nuanced outputs, demonstrating the critical role of efficient data retrieval in natural language processing tasks.

Semantic Search and Recommendations

In the realm of semantic search and recommendation systems, the ability for vector databases to conduct vector searches is key. By analyzing user queries and content through vector embeddings, these hybrid search and recommendation systems can identify and recommend items in ways far better than simple keyword matching and is a growing use case for vector databases.

The Future of Vector Database and Generative AI Technologies

Challenges and Opportunities

Managing high-dimensional data introduces both challenges and opportunities for AI innovation. When you bring in a purpose built vector database, it adds complexity to your overall architecture. You have one more potential point of failure to monitor, one more component to manage and another piece of infrastructure to budget for.

That said, vector databases offer considerable opportunities as well. Almost every organization will certainly have a growing number of use cases that rely on generative ai. Adopting a vector database early ensures you have one of the keep capabilities in your generative AI tech stack to support your roadmap for the coming years.

At the same time, as your application portfolio grows, you will almost certainly need to vectorize more and more data into your vector database. It is entirely possible that enterprises will seek ways to put their entire collective knowledge into a vector database so that it’s easily available as you incorporate generative AI models into existing and new applications.

As this happens, managing these vector indexes and applying governance to ensure they remain accurate and up to date becomes a bigger and bigger challenge (and one that Vectorize is here to solve for you!).

Best Practices and Tips

Pick the right embedding model

Your vector database performance and costs will largely be tied to the quantity of vectors and how many dimensions each of those vectors has, along with the amount of metadata you will be storing for each vector. Generally speaking, you will want to select your embedding model by using a data driven approach to determine which models give you the most relevant context along with an assessment of the price-performance tradeoffs you’ll make.

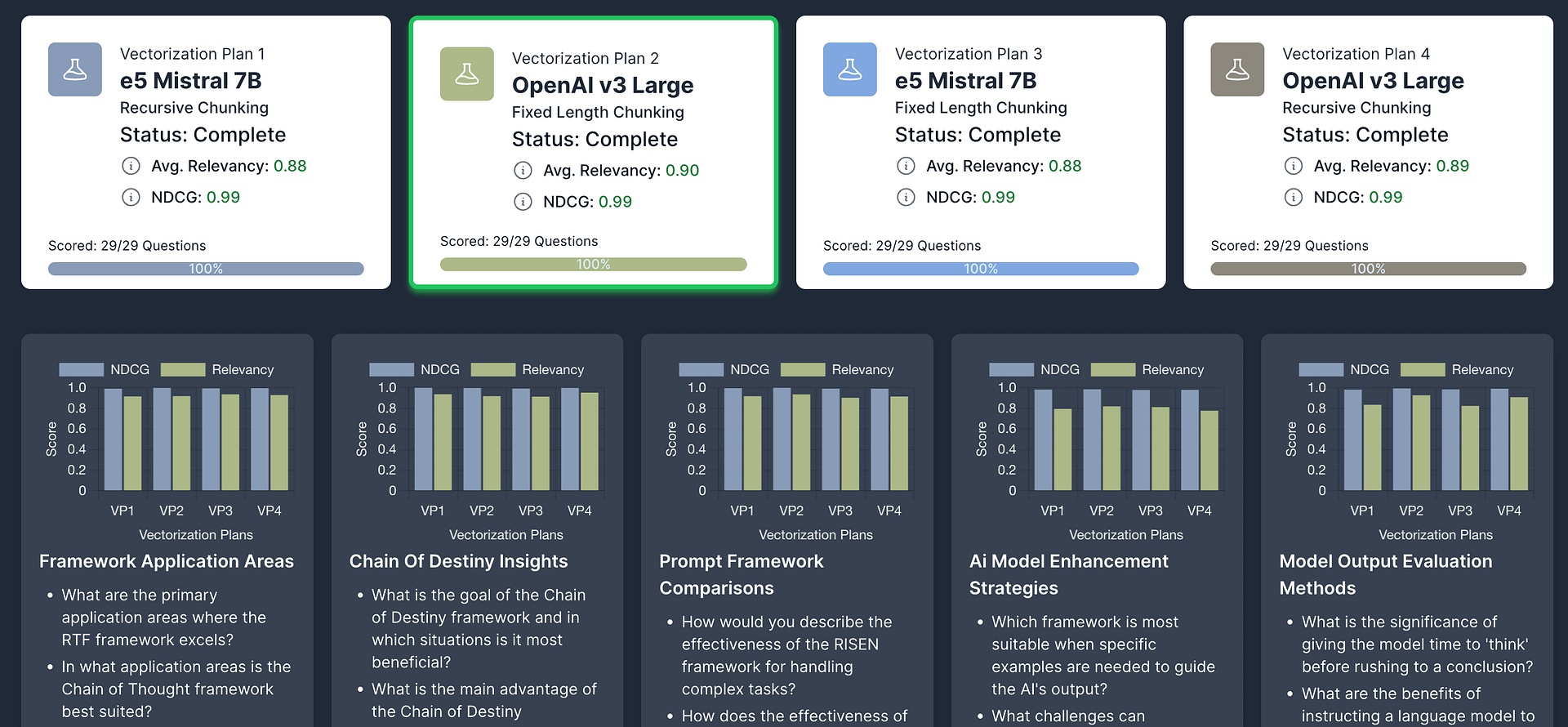

For example, we conducted the following experiment with a representative sample of data using the Vectorize experiment feature:

You can see from the average relevancy scores above, this corpus of documents about prompt engineering achieved slightly better results with the OpenAI text-embeddings-v3-large model vs the smaller model from Mistral, but just barely. However, the vectors from OpenAI are 3x larger than the Mistral embeddings and your costs to use the embedding API from OpenAI will result in considerable additional costs for a tiny improvement in relevancy.

Mitigate stale data and vector drift

One of the biggest challenges that developers and companies encounter when adopting vector databases is that their vector indexes slowly get out of sync as the source data from their knowledge repositories changes.

One of the advantages to using a platform like Vectorize is that your vector database automatically gets updated as soon as any change in the source data is detected, usually in real time with any systems that have a change event notification API.

Consider your data engineering capabilities

Most organizations typically have data engineering capabilities that revolve around structured data ETL. These tools are usually a poor fit for the unstructured data that you’ll primarily be ingesting into your vector database or vector store for use cases like retrieval augmented generation.

Data engineering for your vector database poses unique challenges as well. With traditional data engineering, your end state is well defined. However, with vector databases, you only know that your output should be a vector.

Vectorize gives you the ability to ingest data from any unstructured data source such as files, knowledge bases, and SaaS platforms and use a data driven approach to know for certain that your vector database is optimized to achieve optimal relevancy and performance for your data.

Use metadata filtering

Especially as the volume of vectors in your vector database grows, it will be increasingly important for you to think about the metadata fields you’ll want to filter on. This has the effect of narrowing down the vector set where you’ll perform your similarity search and in turn improves the performance time of your ANN searches.

Vectorize has powerful metadata detection and automation capabilities that greatly simplifies the process of capturing, collecting, and organizing your metadata in your vector database.

Conclusion

Vector databases are an essential component for organizations preparing their data strategy to support generative AI. As machine learning continues to evolve and as we increasingly rely on generative AI to accelerate almost every job in every business, having the right data infrastructure is of paramount importance. This of course includes picking the right vector database but also the data engineering and ongoing management capabilities that surround it. For any organization who doesn’t want to get left behind as competitors use generative AI to disrupt industries, vector databases and vector data management must be considered a top priority.