Build AI Agents That Actually Learn

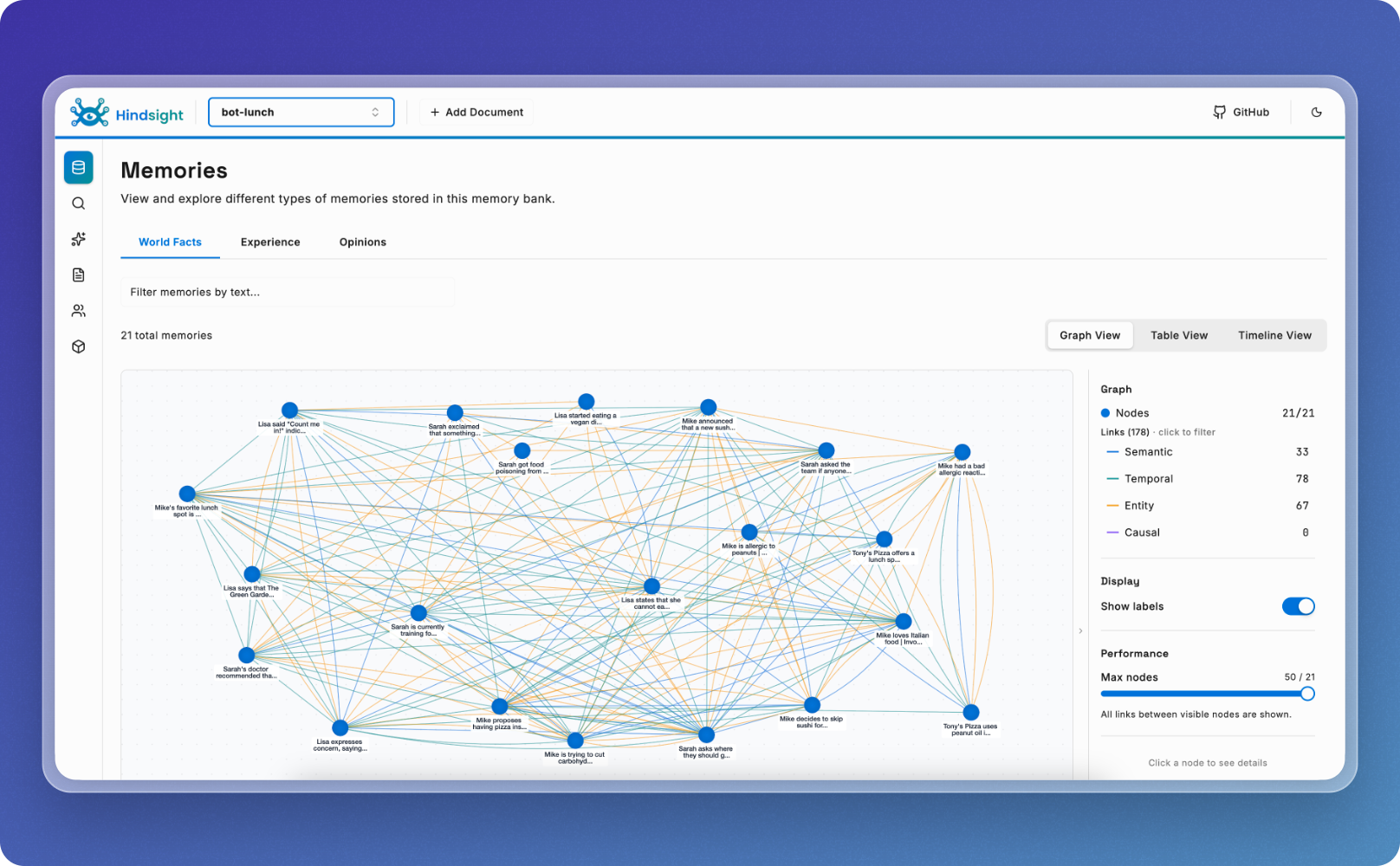

Most agent memory systems are just search. Hindsight gives your agents real memory: the ability to retain what they learn, recall it when relevant, and reflect on experience to form new understanding, not just within a single session but across weeks and months.